I've spent years in the analytics engineering space—including time as a Product Manager at dbt Labs—watching teams build incredible transformation pipelines with dbt Core. And dbt truly is excellent at what it does: it made SQL-based transformations modular, testable, and version-controlled. It brought software engineering practices to analytics and created a thriving community around transformation logic.

But here's what I kept hearing from teams: "We love dbt for our transformations... but what about everything else?"

The reality is that transformation is just one piece of the puzzle. Most teams running dbt Core in production end up building a complex stack around it:

- Airflow, Prefect, or Dagster for orchestration

- Custom scripts for monitoring and alerting

- Separate tools for data ingestion

- Manual playbooks for incident response

- Point solutions for data quality and observability

According to our DataAware survey, 50% of data engineering time is spent maintaining these fragile, disconnected stacks. That means half your team's effort goes to keeping the pipelines flowing instead of building new capabilities.

In this new AI era, this fragmentation becomes even more problematic. To leverage AI effectively and safely in data operations, you need more than just scattered tools—you need a unified system where AI agents have holistic context about your data, your code, and your operations. Point solutions can't deliver that. Agentic workflows require a platform built from the ground up with AI in mind.

That's why we built native dbt Core support into Ascend: to give teams running dbt a path to a truly unified, agentic platform without starting from scratch.

What is Agentic Analytics Engineering?

Before diving into the technical details of the dbt integration, I want to define what we mean by "Agentic Analytics Engineering", because it's more than just AI code completion or chatbots.

Agentic Analytics Engineering is the practice of AI agents working alongside data teams across the entire DataOps lifecycle: from initial development and testing, through deployment and orchestration, to production monitoring and incident response. These agents understand context, make decisions, and take autonomous actions on behalf of the team.

But here's the critical part: truly agentic workflows require a unified platform.

Point solutions—an orchestrator here, a monitoring tool there, a separate IDE somewhere else—can't be truly agentic. Why? Because without a unified system, agents don't have the context, tools, and triggers they need to operate effectively.

Think about what an agent needs to autonomously handle a dbt model failure:

- Context: What changed in the code? What's the execution history? What's the data quality trend?

- Tools: Ability to modify code, re-run models, notify teams, update documentation

- Triggers: Real-time awareness that something went wrong and needs attention

If those capabilities live in five different systems, agents are stuck—they can suggest actions in a chat window, but they can't actually do anything.

The Intelligence Core: Metadata + Tools + Automation

This is why we built Ascend around what we call the Intelligence Core—a unified architecture with three key elements:

1. Unified Metadata: Every aspect of your data operations captured in one place

- Code changes and lineage

- Execution history and performance

- Data quality patterns and anomalies

- User actions and deployment events

2. Agentic Tools: Capabilities that enable agents to take action based on that metadata

- Edit code and configurations

- Trigger pipeline runs

- Coordinate across external systems (via MCP servers)

- Update documentation and tests

3. Automation Engine: Orchestrates both data workflows and agent workflows

- Manages pipeline dependencies and execution

- Fires triggers that activate agents

- Enables event-driven responses across the platform

This unified foundation is what makes Agentic Analytics Engineering possible. And it's what makes our dbt Core integration fundamentally different from traditional orchestrators. Otto doesn't just run your dbt models; it understands them, monitors them, and can act on them autonomously.
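To make the three elements concrete, here is a toy Python sketch of how metadata, tools, and triggers fit together. It is not Ascend's API: the `Event` and `IntelligenceCore` classes, the `notify` tool, and the event names are all hypothetical, chosen only to show why an agent needs all three in one place.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Event:
    """One record in the unified metadata store (hypothetical shape)."""
    kind: str          # e.g. "code_changed" or "model_failed"
    model: str         # the dbt model the event refers to
    detail: str = ""


@dataclass
class IntelligenceCore:
    """Toy stand-in for metadata + tools + automation; not Ascend's API."""
    metadata: List[Event] = field(default_factory=list)
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    triggers: Dict[str, List[Callable[..., None]]] = field(default_factory=dict)
    actions: List[str] = field(default_factory=list)

    def on(self, kind, handler):
        """Automation: register an agent handler for one kind of event."""
        self.triggers.setdefault(kind, []).append(handler)

    def record(self, event):
        """Metadata: store the event, then fire any matching triggers."""
        self.metadata.append(event)
        for handler in self.triggers.get(event.kind, []):
            handler(self, event)

    def history(self, model):
        return [e for e in self.metadata if e.model == model]


def triage_failure(core, event):
    """Agent handler: pull context from metadata, then act through a tool."""
    changes = [e for e in core.history(event.model) if e.kind == "code_changed"]
    context = changes[-1].detail if changes else "no recent code change"
    message = f"{event.model} failed; last change: {context}"
    core.actions.append(core.tools["notify"](message))


core = IntelligenceCore()
core.tools["notify"] = lambda message: f"NOTIFY: {message}"  # hypothetical tool
core.on("model_failed", triage_failure)
core.record(Event("code_changed", "orders", "renamed column order_ts"))
core.record(Event("model_failed", "orders", "column not found"))
print(core.actions)
```

If the change history lived in one system and the notifier in another, `triage_failure` would have nothing to join on. That is the practical argument for keeping all three in a single platform.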

Your New Favorite Coworker: Otto

Let's talk about Otto, Ascend’s built-in AI Agent. Otto is designed to be a coworker who understands your entire data operation and works both interactively when you need help and autonomously in the background. There are four key ways to leverage Otto across your dbt workflows:

1. Chat with Your Stack

Otto provides a natural language interface to interact with your entire dbt project and its operational history. You can ask questions like:

- "What happened with last night's dbt run?"

- "Which models are running slower than usual?"

- "Show me all models that depend on the raw_orders table"

- "Why did the customer_lifetime_value model fail?"

- "What data quality tests should I add to this model?"

Otto doesn't just query your code—it has access to the Intelligence Core, which means it understands:

- Execution history and performance trends

- Dependency relationships across models

- Recent changes and who made them

- Data quality patterns and test results

When you ask "which models are slowing down my pipeline?", Otto can show you execution time trends over weeks or months, identify regressions ("this model has gotten 3x slower since last month"), and suggest specific optimizations based on your warehouse platform.
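As a rough illustration of what "3x slower since last month" means in terms of execution history, here is a minimal Python sketch that compares recent run times against an earlier baseline. The function names, window sizes, and threshold are my own assumptions for the example, not how Otto computes it.

```python
from statistics import median


def slowdown_ratio(durations, recent=7, baseline=30):
    """Return median(recent runs) / median(earlier runs), or None if unknown.

    durations: run times in seconds, oldest first.
    """
    if len(durations) < recent + 1:
        return None  # not enough history to form a baseline
    recent_runs = durations[-recent:]
    baseline_runs = durations[-(recent + baseline):-recent]
    base = median(baseline_runs)
    if base == 0:
        return None
    return median(recent_runs) / base


def find_regressions(history, threshold=2.0):
    """Map model name -> slowdown ratio for models at or above the threshold."""
    regressions = {}
    for model, durations in history.items():
        ratio = slowdown_ratio(durations)
        if ratio is not None and ratio >= threshold:
            regressions[model] = round(ratio, 2)
    return regressions


# Made-up history: 30 older runs followed by the 7 most recent runs.
history = {
    "customer_lifetime_value": [60] * 30 + [180] * 7,
    "stg_orders": [20] * 37,
}
print(find_regressions(history))
```

Medians are used instead of means so that a single unusually slow run does not flag a model on its own.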

2. Inline Code Assistance

As you write models, Otto works directly in your code editor to suggest improvements, generate boilerplate, and catch issues before they reach production.

Otto understands dbt-specific patterns—proper use of ref() and source(), Jinja templating conventions, materialization strategies. When Otto suggests changes, it preserves your code style and follows your project's conventions.
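To show why `ref()` matters for tooling, here is a small Python sketch that reads `ref()` calls out of model SQL and walks the resulting graph to find everything downstream of a model. It is a simplification under stated assumptions: it only handles the single-argument form `{{ ref('model_name') }}`, ignores `source()`, and the model names and SQL are invented. It is not how dbt or Otto parse projects.

```python
import re
from collections import deque

# Matches {{ ref('name') }} or {{ ref("name") }} (single-argument form only).
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"]([^'\"]+)['\"]\s*\)\s*\}\}")


def parse_refs(sql):
    """Return the set of model names referenced via ref() in one SQL file."""
    return set(REF_PATTERN.findall(sql))


def downstream_of(target, models):
    """Return every model that depends on target, directly or transitively.

    models: mapping of model name -> SQL text.
    """
    children = {}
    for name, sql in models.items():
        for parent in parse_refs(sql):
            children.setdefault(parent, set()).add(name)

    seen, queue = set(), deque([target])
    while queue:  # breadth-first walk over the dependency graph
        for child in children.get(queue.popleft(), ()):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


models = {
    "stg_orders": "select * from {{ source('shop', 'raw_orders') }}",
    "stg_customers": "select * from {{ source('shop', 'raw_customers') }}",
    "orders": "select * from {{ ref('stg_orders') }}",
    "customer_lifetime_value": (
        "select * from {{ ref('orders') }} "
        'join {{ ref("stg_customers") }} using (customer_id)'
    ),
}
print(sorted(downstream_of("stg_orders", models)))
```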

3. Build Custom Agents and Rules

Want Otto to enforce specific standards or automate repetitive workflows unique to your team? You can build custom agents and rules tailored to your operations.

Custom Agents allow you to configure system-level prompts so you can deploy agents tailored to your workflows:

- Generate documentation when models change

- Analyze performance and suggest improvements

- Review code for compliance with conventions and regulations

Custom Rules ensure Otto aligns with your team's requirements:

- Enforce naming conventions for models and columns

- Require specific tests for PII columns

- Mandate documentation for models in production

- Validate that incremental models include appropriate filters
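Rules like the four above can be thought of as plain checks over a description of each model. The Python sketch below is only an illustration of that idea: the dictionary shape (`in_production`, `pii`, and so on) is hypothetical and is not Ascend's rule format. The `not_null` test and the `is_incremental()` macro are standard dbt features.

```python
import re

SNAKE_CASE = re.compile(r"^[a-z][a-z0-9_]*$")


def check_model(model):
    """Return a list of rule violations for one model (hypothetical shape)."""
    name = model["name"]
    problems = []

    # Naming convention for models and columns.
    if not SNAKE_CASE.match(name):
        problems.append(f"{name}: model name is not snake_case")
    for column in model.get("columns", []):
        if not SNAKE_CASE.match(column["name"]):
            problems.append(f"{name}.{column['name']}: column name is not snake_case")
        # PII columns must carry at least a not_null test.
        if column.get("pii") and "not_null" not in column.get("tests", []):
            problems.append(f"{name}.{column['name']}: PII column lacks a not_null test")

    # Production models must be documented.
    if model.get("in_production") and not model.get("description"):
        problems.append(f"{name}: production model has no description")

    # Incremental models should filter on is_incremental().
    if model.get("materialized") == "incremental" and "is_incremental()" not in model.get("sql", ""):
        problems.append(f"{name}: incremental model has no is_incremental() filter")

    return problems


example = {
    "name": "fct_orders",
    "in_production": True,
    "description": "",
    "materialized": "incremental",
    "sql": "select * from {{ ref('stg_orders') }}",
    "columns": [
        {"name": "customer_email", "pii": True, "tests": ["unique"]},
        {"name": "order_id", "tests": ["not_null", "unique"]},
    ],
}
for problem in check_model(example):
    print(problem)
```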

Here's a simple example of a custom agent configuration:

Check out our docs on custom agents for more examples and configuration guides. We're building a library of pre-built agents for common dbt scenarios and welcome community contributions.

4. Agentic Incident Response

This is where Otto moves from helpful assistant to autonomous operator. Using Ascend’s automation engine, users can configure Otto to act based on event-driven triggers. This becomes especially powerful when you allow Otto to agentically respond to errors.

Example Scenario: 2 AM Schema Change

An upstream source table schema changes, breaking several downstream dbt models. Here's what happens:

- Detection: Otto detects the failures immediately via Intelligence Core monitoring

- Diagnosis: Analyzes errors, checks Git history, compares current vs. previous schemas

- Root Cause: Traces failures to the specific schema change

- Proposed Fix: Updates model definitions to handle the new schema

- User Approval: Presents the fix for review (nothing deploys without approval)

- Execution: After approval, re-runs affected models and validates success

- Coordination: Updates team via Slack, creates incident record in PagerDuty
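The diagnosis step, comparing current and previous schemas, is easy to picture in code. Here is a minimal Python sketch under stated assumptions: schemas are plain column-to-type mappings, each model declares which source columns it reads, and all names and types are invented. It shows the comparison itself, not Otto's implementation.

```python
def diff_schemas(previous, current):
    """Compare two {column: type} mappings and report what changed."""
    added = {c: t for c, t in current.items() if c not in previous}
    removed = {c: t for c, t in previous.items() if c not in current}
    retyped = {
        c: (previous[c], current[c])
        for c in previous.keys() & current.keys()
        if previous[c] != current[c]
    }
    return {"added": added, "removed": removed, "retyped": retyped}


def affected_models(removed_columns, model_columns):
    """Return models that read at least one removed column.

    model_columns: mapping of model name -> set of source columns it selects.
    """
    removed = set(removed_columns)
    return sorted(m for m, cols in model_columns.items() if cols & removed)


previous = {"order_id": "int", "order_ts": "timestamp", "amount": "float"}
current = {"order_id": "int", "ordered_at": "timestamp", "amount": "numeric"}

diff = diff_schemas(previous, current)
print(diff)
print(affected_models(
    diff["removed"],
    {"stg_orders": {"order_id", "order_ts"}, "stg_payments": {"order_id", "amount"}},
))
```

A removed column paired with an added column of the same type, as with `order_ts` and `ordered_at` here, is a strong hint of a rename. That is the kind of fix you would want presented for approval, not applied silently.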

MCP Server Integration:

Otto can coordinate responses across your entire toolstack using MCP (Model Context Protocol) servers:

- Post diagnostics to Slack channels

- Create PagerDuty incidents with full context

- Open GitHub issues with proposed fixes

- Update status pages

- Trigger custom webhooks

How the dbt Integration Works

Now let's get into the technical details of how you actually work with dbt Core in Ascend.

A. Bringing Your dbt Project into Ascend

Getting started is straightforward—Ascend treats your dbt project like any other code repository.

Git Integration: You can connect your existing Git repository to Ascend. Your dbt project structure remains standard—no special configurations or restructuring required. All your normal Git workflows continue to work: branches, pull requests, commits, code reviews.

dbt Application: Once you've attached your desired Git repository, you can create a dbt Application within Ascend with a simple YAML configuration:

Warehouse Connection: Next, you connect Ascend to your data warehouse (Snowflake, BigQuery, Databricks, or MotherDuck). This connection is where your dbt models actually execute. Credentials are securely stored in vaults, and the same connection is used for all model runs.

B. Execution and Orchestration

Where dbt Actually Runs: When Ascend executes your dbt models, the computation happens in your cloud data warehouse using Ascend runners. There's no data movement and no separate compute layer; everything runs natively in your environment. You maintain full control over warehouse resources and permissions.

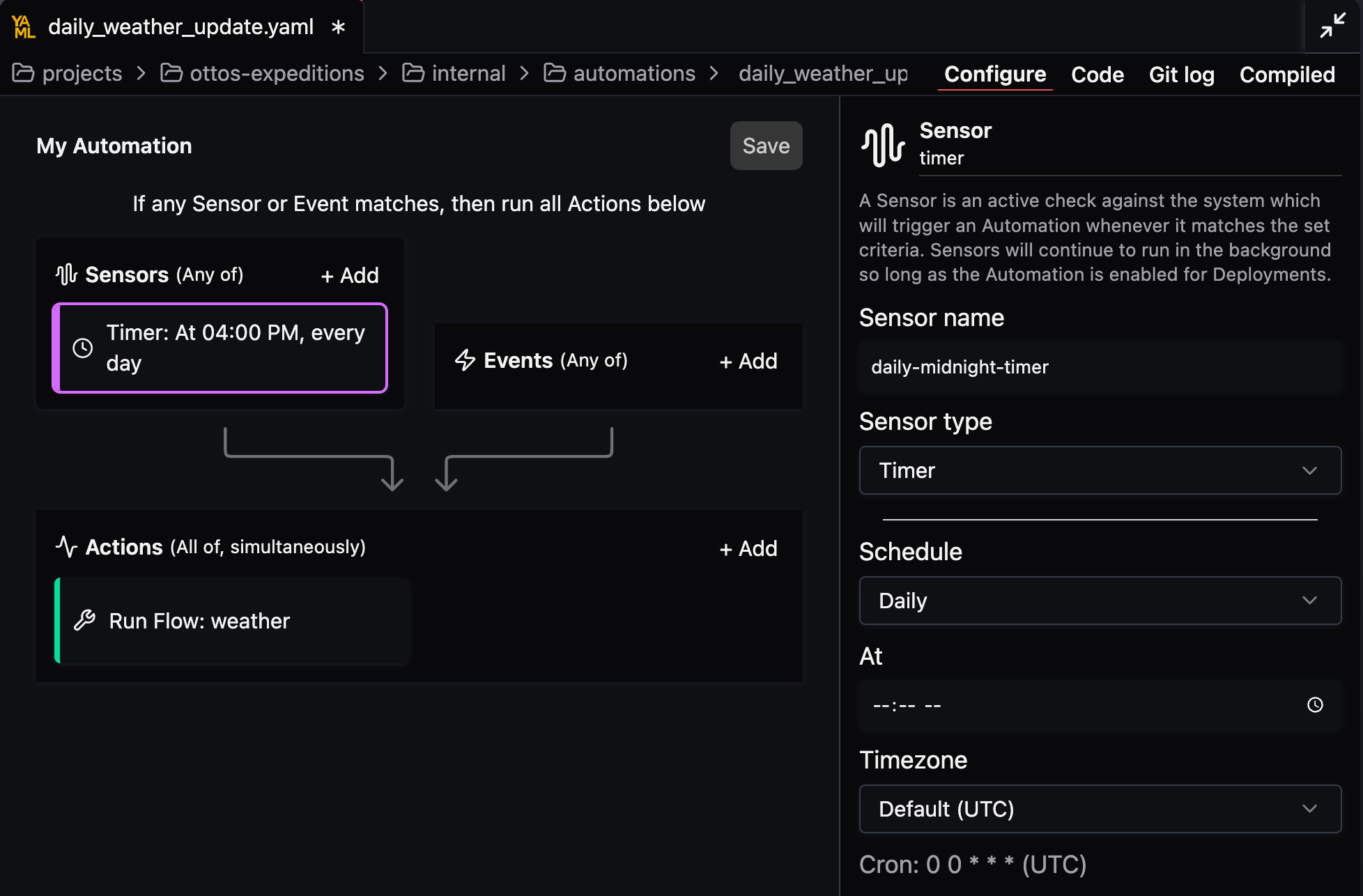

Triggering Runs: Ascend gives you flexible options for orchestrating dbt models:

- Manual Runs: Kick off runs on-demand from the UI—useful for development and testing.

- Scheduled Runs: Traditional time-based triggers (hourly, daily, etc.) for predictable workloads.

You can use a form-based UI or YAML to configure your orchestration. For example, the following configuration runs a specific flow daily at 12:00 AM UTC:

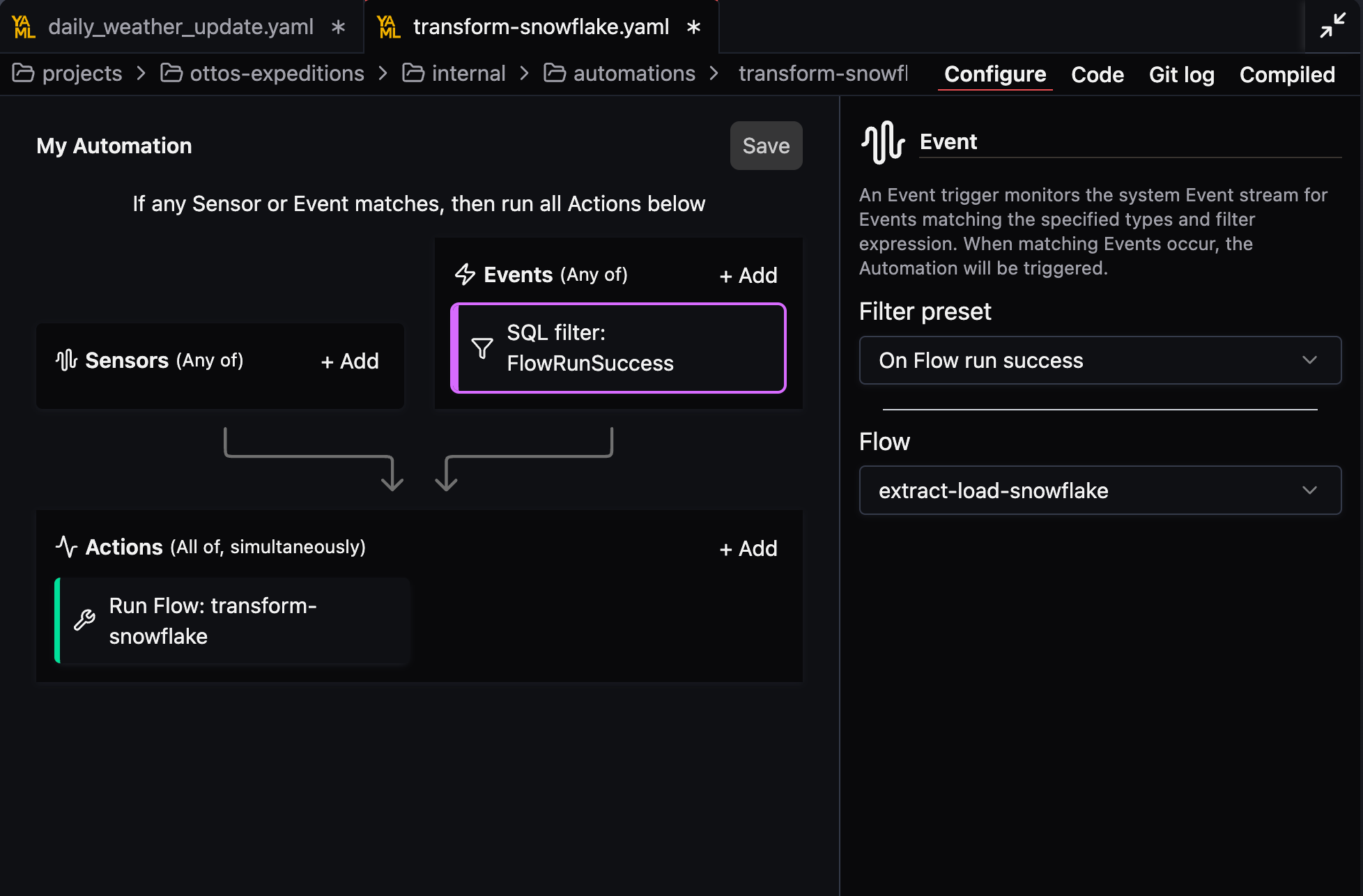

- Event-Based Triggers: This is where things get interesting. You can configure dbt models to run based on real events:

  - Upstream dbt model completes

  - Custom webhooks or API calls

  - A pipeline failure

This configuration executes a downstream flow after an upstream flow has successfully completed:

Optimization built in: You're not limited to running your entire dbt project. You can:

- Execute specific models or tags

- Run only changed models (incremental processing)

- Apply Ascend's smart processing for intelligent execution optimization
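For readers used to the dbt Core CLI, the first two options map onto dbt's node selection syntax: `tag:` selectors, and `state:modified+` together with `--state`. The Python sketch below only assembles such a command line. The helper name and the artifacts path are hypothetical, and I am not claiming this is the command Ascend issues internally.

```python
def dbt_build_args(models=(), tags=(), changed_only=False, state_dir=None):
    """Assemble an argv list for `dbt build` using dbt's node selection syntax."""
    selectors = list(models) + [f"tag:{tag}" for tag in tags]
    if changed_only:
        if state_dir is None:
            # state: selectors compare against artifacts from a previous run.
            raise ValueError("changed_only requires state_dir")
        selectors.append("state:modified+")  # modified nodes plus descendants

    args = ["dbt", "build"]
    if selectors:
        args += ["--select", *selectors]  # space-separated selectors are a union
    if changed_only:
        args += ["--state", state_dir]
    return args


print(" ".join(dbt_build_args(models=["customer_lifetime_value"])))
print(" ".join(dbt_build_args(tags=["nightly"])))
print(" ".join(dbt_build_args(changed_only=True, state_dir="./prod-artifacts")))
```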

The flexibility here means you can orchestrate dbt the way that makes sense for your workflows, whether that's simple schedules or sophisticated event-driven pipelines that react to your data in real time.
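If event-driven orchestration is new to you, the core idea is small: each trigger names the flow to run, the flow it waits on, and the outcome it reacts to. The Python sketch below illustrates that matching logic only. The `FlowEvent` and `Trigger` classes and the flow names are hypothetical and do not reflect Ascend's configuration schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowEvent:
    flow: str
    status: str  # "success" or "failure"


@dataclass(frozen=True)
class Trigger:
    run_flow: str                # flow to start
    after_flow: str              # flow whose completion we react to
    on_status: str = "success"   # outcome that activates the trigger


def flows_to_run(event, triggers):
    """Return the flows that should start in response to one event."""
    return [
        t.run_flow
        for t in triggers
        if t.after_flow == event.flow and t.on_status == event.status
    ]


triggers = [
    Trigger(run_flow="marts", after_flow="staging"),
    Trigger(run_flow="incident_response", after_flow="staging", on_status="failure"),
]
print(flows_to_run(FlowEvent("staging", "success"), triggers))
print(flows_to_run(FlowEvent("staging", "failure"), triggers))
```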

Getting Started

The dbt Core integration is available now in private preview—and I'd love for you to be part of shaping how it evolves. You can sign up for early access here.

Hands-On Lab: Agentic Analytics Engineering with dbt Core

The best way to see Otto in action is through our upcoming hands-on lab. You'll:

- Connect your dbt project (or use our sample projects)

- Configure intelligent orchestration and event-based triggers

- Deploy and run your dbt project in a production environment

Register for the hands-on lab:


Wrapping it up

dbt transformed how we think about data transformation—bringing software engineering practices to analytics at scale. Now, with Agentic Analytics Engineering, we're extending that same elegance to the entire data operation.

It's about giving you a platform that understands your dbt code, learns from your operations, and works alongside your team—so you can focus on delivering data products that drive real business value.

Join the preview, try Otto on your dbt projects, and help us shape the future of analytics engineering. https://www.ascend.io/event/agentic-analytics-engineering-with-dbt-core
