Ship data products faster with a unified data engineering platform

Built-in compliance, audit trails, and role-based access controls

Deploy data pipelines with CI/CD and GitOps workflows

Fine-grained RBAC with environment-level isolation

One-click export or links to share directly with stakeholders

How Ascend Helps: The TL;DR

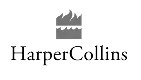

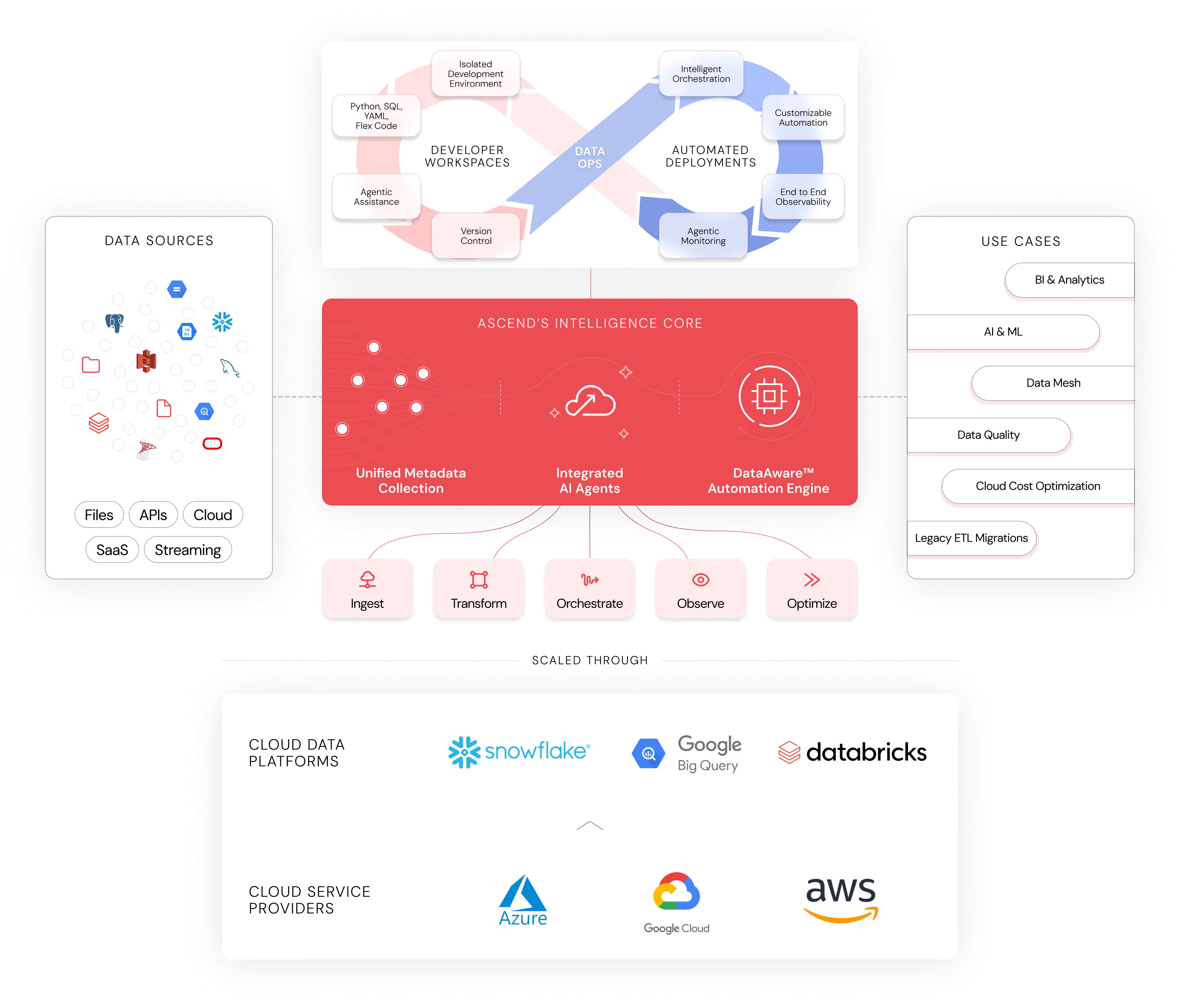

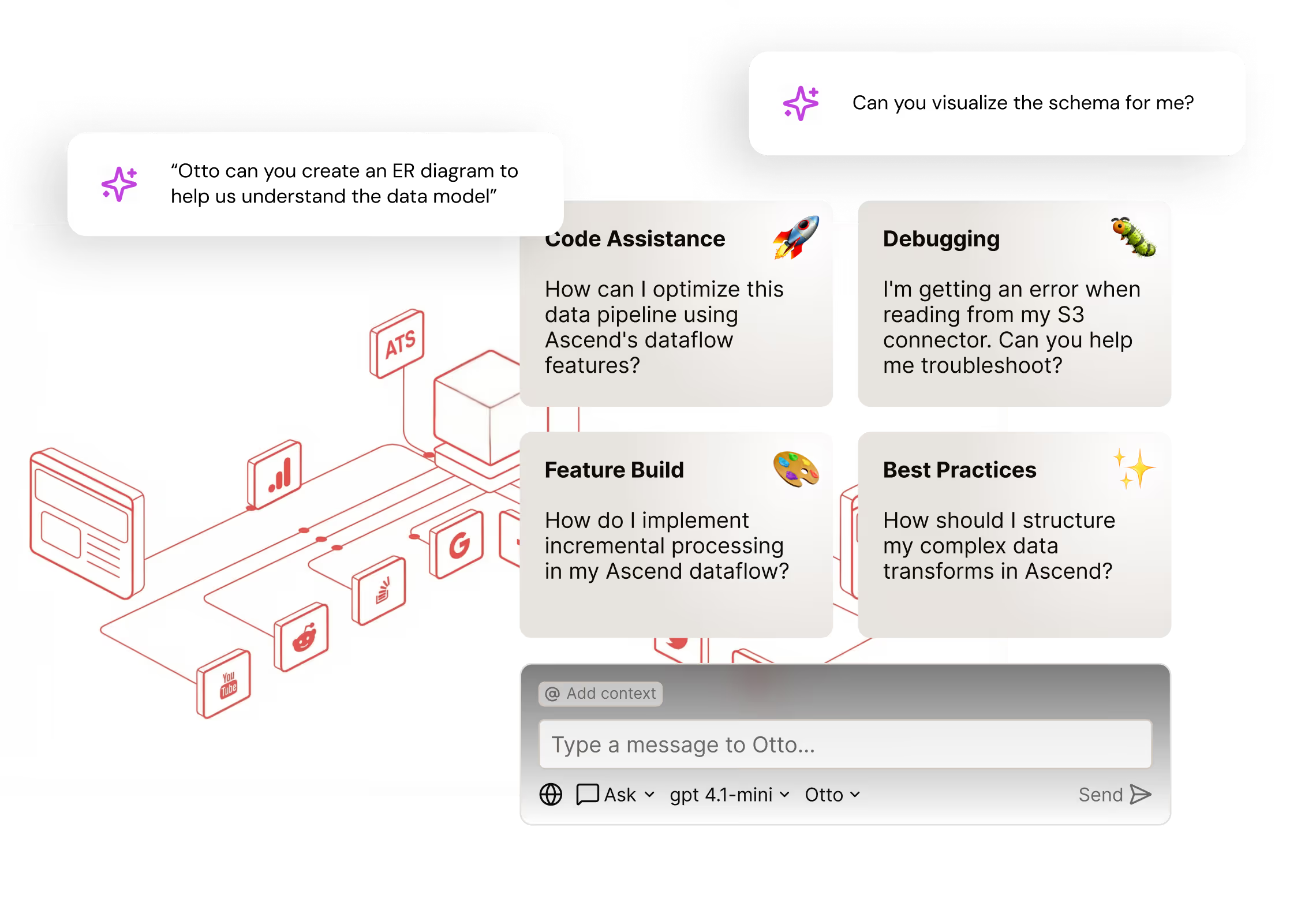

The AI Data Engineering Platform (That Actually Understands Your Stack)

Pipelines at Scale

Use code when you want it, clicks when you don’t. Ascend combines integrated AI agents and native GitOps for seamless team collaboration.

Ascend automates orchestration using rich metadata and event-driven triggers so pipelines run only when needed, and custom logic is easy to build in.

Monitor pipeline performance, trace lineage end-to-end, and debug faster with real-time, metadata-powered insights.

Run faster, spend less. Ascend uses metadata and fingerprinting to cut unnecessary compute without sacrificing performance.

Proven Results. Measurable Impact.

"Ascend is super intuitive and easy to use. In just a matter of weeks, we overcame a challenge that we'd been struggling with for over 9 months... We've only used 10% of the functionality so far, which is very promising in terms of what we can accomplish next."

"With Ascend, we’ve been able to keep our data engineering team small and nimble, while attaining world-class results. That translates to more funds for other initiatives, and an outsized ROI."

"With Ascend, the tech just worked and the people just worked. The relationship with Ascend is fantastic, which makes our lives so much easier."

"Ascend has transformed our data processing capabilities, allowing us to deliver valuable insights based on more data, more rapidly. It has been a game-changer for our business."

Let’s give your team their time (and sanity) back.

Free Up Analytics and Data Engineering Time

Frequently Asked Questions

Traditional ETL platforms rely on stitching tools together, with limited flexibility and static workflows. Furthermore, traditional tools weren't built to leverage agentic capabilities that enable teams to move faster. Ascend offers an AI-native developer platform where teams can build and deploy end-to-end pipelines with agentic assistance. By unifying data pipeline development within Ascend, teams reduce maintenance, improve observability, and accelerate their time to production, while leveraging AI to automate routine tasks.

Ascend uses rich metadata to automate pipelines and provide deep context to intelligent agents. This approach goes beyond rigid orchestration tools by providing intelligent optimization, reprocessing only the data impacted by changes to the logic or to the data itself. Furthermore, embedded agents offload work from teams by monitoring pipelines and responding dynamically to system triggers such as pipeline errors.
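To make the "only reprocess what changed" idea concrete, here is a minimal Python sketch of fingerprint-based skipping. It is an illustration under stated assumptions, not Ascend's implementation: the `fingerprint` helper and the `FingerprintCache` class are hypothetical names, and the fingerprint is assumed to be a SHA-256 hash over the step's logic text plus its input rows.

```python
import hashlib
import json


def fingerprint(logic: str, input_rows: list) -> str:
    """Hash a step's logic together with its input data (hypothetical helper)."""
    payload = json.dumps({"logic": logic, "rows": input_rows}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class FingerprintCache:
    """Re-runs a step only when its logic or input data has changed."""

    def __init__(self):
        self._fingerprints = {}  # step name -> fingerprint of the last run
        self._results = {}       # step name -> result of the last run

    def run(self, name, logic, input_rows, transform):
        """Return (result, recomputed) for the named step."""
        fp = fingerprint(logic, input_rows)
        if self._fingerprints.get(name) == fp:
            # Nothing changed since the last run: reuse the stored result.
            return self._results[name], False
        result = transform(input_rows)
        self._fingerprints[name] = fp
        self._results[name] = result
        return result, True


def total_amount(rows):
    """Example transform: sum the 'amount' field across rows."""
    return sum(row["amount"] for row in rows)
```

Calling `run` twice with the same logic and rows computes once and then reuses the stored result; changing either the rows or the logic string produces a new fingerprint and triggers a recompute.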

Ascend significantly reduces manual data engineering effort by automating common data operations like orchestration, documentation, and pipeline optimization. Embedded AI agents also boost team productivity from design to deployment. Many teams report up to a 95% reduction in manual work, letting engineers focus on higher-value tasks like building new data products.

Ascend reduces infrastructure costs by minimizing unnecessary compute through intelligent pipeline optimization. It uses metadata to eliminate redundant runs, avoid reprocessing, and automatically scale workloads based on real-time data changes. Customers often report significant savings across platforms like Snowflake, Databricks, and BigQuery.

Ascend is designed for teams of all sizes. Startups benefit from fast onboarding, flexibility, and intelligent automation, while enterprises leverage its scalability, governance, and security. It scales with your team and complexity.

Ascend users report: 83% reduction in data processing costs, 87% faster data processing times, and 7x boost in engineering team productivity. These gains come from automating previously manual work and freeing teams to focus on innovation.

Most teams go from signup to running production pipelines in under a week. Ascend offers guided onboarding, out-of-the-box connectors, and AI-powered setup assistance to help you move fast, with no professional services required.

Ascend often costs significantly less than building and maintaining in-house infrastructure. Instead of stitching together multiple point solutions for orchestration, lineage, monitoring, and deployment, Ascend unifies all these capabilities into a single platform. It also intelligently reduces compute usage through features like Smart Tables and metadata-driven optimization, helping teams cut both engineering hours and cloud platform costs. According to an independent ESG study, Ascend delivers 500-700% productivity improvements compared to traditional data stacks. Organizations typically eliminate $156K in annual tooling costs and achieve 80% faster pipeline development.

Ascend pricing is based on monthly credit usage. Credits are consumed based on how long pipelines are running. We charge monthly minimums based on the number of deployments and active users. These minimums cover the underlying platform infrastructure and are converted to runtime credits. This ensures that teams don't pay extra runtime charges on top of their minimums, and get predictable, scalable pricing as usage grows.
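As a rough illustration of how a minimum that converts into runtime credits could work, the Python sketch below computes a monthly bill. Every rate and parameter name here is a made-up assumption for the sake of the arithmetic, not published Ascend pricing, and it assumes that usage beyond the included credits is billed at the same per-credit price.

```python
def monthly_bill(
    deployments: int,
    active_users: int,
    runtime_hours: float,
    *,
    per_deployment_minimum: float,  # hypothetical rate
    per_user_minimum: float,        # hypothetical rate
    credits_per_hour: float,        # hypothetical conversion
    price_per_credit: float,        # hypothetical rate
) -> float:
    """Estimate a monthly bill where the minimum is converted to credits."""
    # The monthly minimum scales with deployments and active users.
    minimum_charge = (
        deployments * per_deployment_minimum + active_users * per_user_minimum
    )
    # The minimum is converted into runtime credits that usage draws down first.
    included_credits = minimum_charge / price_per_credit
    used_credits = runtime_hours * credits_per_hour
    # Only usage beyond the included credits adds to the bill (assumption).
    overage_credits = max(0.0, used_credits - included_credits)
    return minimum_charge + overage_credits * price_per_credit
```

Under these made-up rates, a team whose runtime stays within the credits covered by its minimum pays only the minimum, which matches the point that runtime is not charged on top of it.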

Yes. While Ascend is a self-serve platform, we offer onboarding support and migration assistance for teams moving from tools like Airflow, dbt, or legacy ETL platforms. With Ascend's embedded agents, teams can also migrate from legacy tools with AI-powered assistance. For customers modernizing their data stack, we can provide hands-on help in architecting and implementing data pipelines.
