Ascend gives you everything you need to build and deploy data pipelines end to end. On the platform, you can:

1. Ingest data from a wide range of sources using pre-built connectors

2. Transform data with low-code and full-code options

3. Orchestrate workflows with intelligent scheduling and automation

4. Monitor pipelines in real time with built-in observability and error handling

5. Optimize performance by processing only changed data

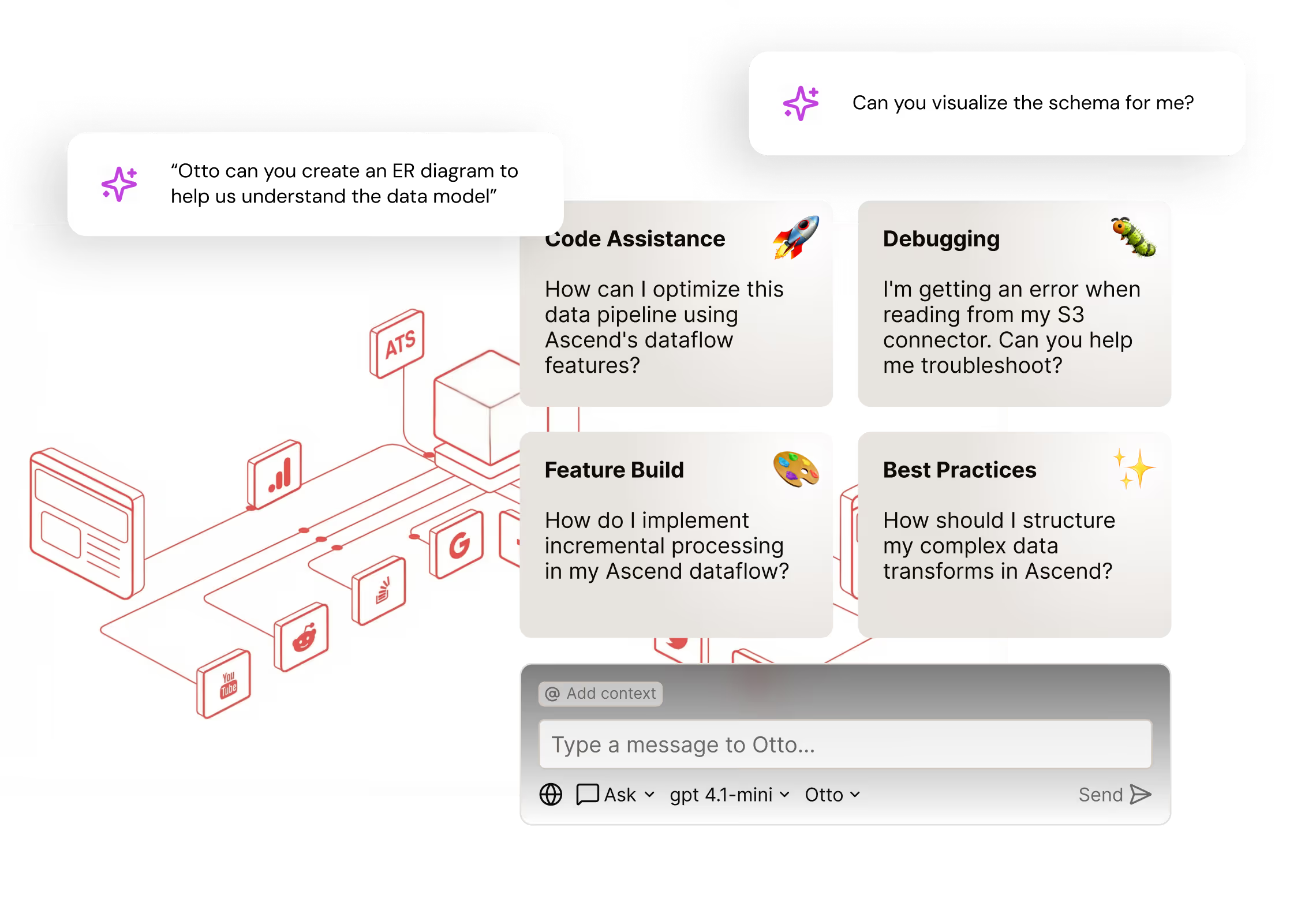

6. Leverage AI to speed up development with code suggestions, auto-documentation, and smart troubleshooting

7. Collaborate securely with native Git integration, role-based access control, and audit trails

It’s a complete platform for designing, deploying, and scaling reliable data products without stitching together multiple tools.

.webp)

.avif)

.avif)

.avif)

.avif)

.webp)

.webp)

.webp)

.webp)

.webp)

%202.avif)

.avif)