About this Hands-on Lab

January 21, 2026 | 10am PT / 1pm ET

Learn to build, run, and manage dbt models with AI: from SQL generation to production orchestration. In this 45-minute session, you'll learn how to use AI agents to streamline development, deployment, and maintenance. We'll walk through a real analytics project where you'll:

- Use AI agents to generate dbt models, tests, and documentation from natural language

- Deploy and orchestrate your dbt pipeline with automatic dependency management

- Debug and refactor models automatically with agentic incident response

By the end of this lab, you'll have a production-ready dbt project with automated orchestration and the skills to apply agentic workflows to your own analytics engineering.

Who This Is For

This lab is designed for:

- Analytics engineers currently using dbt Core who need better orchestration and automation

- Data engineers managing dbt projects in production who are tired of manual pipeline maintenance

- dbt users curious about using AI to accelerate both development and operations

Jenny Hurn:

Hello, everybody, and welcome to today's hands-on lab on agentic analytics engineering with dbt Core. We're so excited that you're here.

Quick introduction. My name is Jenny Hurn. I am our chief of staff here at Ascend.io. And with me on the call, I have Cody Peterson, who is our product manager.

Cody, do you want to just head to the next slide real fast for us? Quick fun fact about Cody. Cody is a former product manager at dbt Labs. So very, very familiar with dbt. We're so excited to get your insights, not just on data and analytics engineering with dbt, but also just this AI kind of future that we live in. You are someone who thinks about AI very deeply and has so much experience in how we leverage AI to be really effective. So I'm so excited for people to hear from you today.

A couple of quick logistics for everyone. We're, again, so excited that you're here. We see a ton of people pouring in. Feel free to say hi in the chat. We have a poll you can fill out on how familiar or comfortable you are with dbt. That gives us a sense of where everyone's coming from and how we want to talk through different things as we go today.

And then one other logistic thing to point out is we do have a Q&A tab, and you can go ahead and throw questions in the Q&A tab as we go if you have questions as we are building, as we are operating, as we are talking through things. I'll be keeping my eye on the Q&A tab and the chat, and I can interrupt Cody as we need to if anyone's stuck anywhere or needs help with anything. We will also try to leave some room at the end of this time for Q&A as well.

So, again, feel free to use all of these fun features of the platform. Really excited. It's fun to see. We see folks from Canada, and, yeah, thanks for saying hi.

Alright. Getting started, Cody. I think some of the things we're talking about today are the architectural fundamentals that you need to really leverage AI as an analytics engineer, as a data team. But before we get into that, can you tell us how you think about AI? Like, I mentioned, you're someone who thinks about AI quite deeply. So what's on the top of your mind as you think about AI these days?

Cody Peterson:

Yeah. I think about it a lot. Prior to being a PM at dbt, as you mentioned, I actually started my career in AI, on Microsoft's AI platform. So it's been incredible to watch the last five-ish years, from the initial launch of GitHub Copilot, where you had your first AI-assisted programming, to what we've seen in the last two years and especially the last six months, with agents really taking hold, particularly in software engineering with things like Cursor and Claude Code.

So I spend a lot of my time thinking about the data space. How can we use AI to make analytics engineering and data engineering less of the day-to-day drudgery and more about high-value architecture and providing value for businesses and organizations? That's really what excites me: being able to ship and do a lot more, much faster than ever before.

Jenny Hurn:

Yeah. And I think we're seeing that across different teams in our organizations. Right? So if you go to that next slide, Cody, we see all these different parts of our organizations leveraging AI. What do you think about how these things are progressing?

Cody Peterson:

Yeah. Things are progressing very fast. These numbers and studies that we're referencing are probably even out of date today.

You see it across domains like software engineering, which tends to lead ahead of data engineering and analytics engineering. Agents are writing and debugging code much faster than humans could on their own. Tools like GitHub Copilot, which already feel a bit dated, are being updated to be more agentic, alongside tools like Cursor and Claude Code.

We're also seeing a bunch of AI adoption in less technical domains, marketing, support, HR, sales, where, again, people are really able to do less of the kind of grunt work, sending automated things, optimizing your pipelines, responding to kind of that first pass of support queries. So we're really seeing success all over the place.

But for a lot of teams, sometimes in software development, depending on kind of your geography in your organization, but especially in data engineering and analytics engineering, you might feel behind. So this is where we as a product, as Ascend, would like to kind of give you this baseline platform that helps you really speed up your journey toward agentic analytics engineering.

Jenny Hurn:

So with that in mind, can you give people a frame of reference of how we think about what is agentic analytics engineering or agentic data engineering?

Cody Peterson:

Yeah. So we've talked a lot about agents. We've talked about AI. We've talked about analytics engineering. We've talked about data engineering. How do these all relate?

So really what we're thinking is AI agents plus analytics engineering equals agentic analytics engineering. We're starting to see a shift in the data space from kind of these traditional batch scheduled pipelines that you're used to. You schedule a dbt pipeline every night at midnight or every hour. And we're starting to see things shift more to this event-driven, agentic orchestration.

So you can have triggers based on new data coming in. You can have triggers based on schema changes. You can have triggers based on errors and anomalies. And you can have AI agents respond to all of these things. So that's really what we're seeing as kind of the future of agentic analytics engineering.
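To make the trigger model above concrete, here is a minimal Python sketch of event-driven dispatch. Each event kind (new data, schema change, run error) is routed to the handlers registered for it, rather than everything waiting on a fixed schedule. The `Event` and `Dispatcher` types, the event names, and the handlers are all hypothetical. This is not Ascend's or Otto's API, and the handlers only return a description of what a real system might do.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical event kinds mirroring the triggers described above.
NEW_DATA = "new_data"
SCHEMA_CHANGE = "schema_change"
RUN_ERROR = "run_error"


@dataclass
class Event:
    kind: str
    source: str
    detail: str = ""


class Dispatcher:
    """Routes each event to every handler registered for its kind."""

    def __init__(self) -> None:
        self.handlers: dict = {}

    def on(self, kind: str, handler: Callable[[Event], str]) -> None:
        self.handlers.setdefault(kind, []).append(handler)

    def dispatch(self, event: Event) -> list:
        # Events with no registered handler are ignored (empty result).
        return [handler(event) for handler in self.handlers.get(event.kind, [])]


# Placeholder handlers: a real system would start a run or hand the
# event to an agent. These only describe the intended action.
def rerun_downstream(event: Event) -> str:
    return f"run models downstream of {event.source}"


def ask_agent(event: Event) -> str:
    return f"ask agent to investigate {event.kind} on {event.source}: {event.detail}"


dispatcher = Dispatcher()
dispatcher.on(NEW_DATA, rerun_downstream)
dispatcher.on(SCHEMA_CHANGE, ask_agent)
dispatcher.on(RUN_ERROR, ask_agent)

actions = dispatcher.dispatch(Event(SCHEMA_CHANGE, "raw_customers", "new column phone_number"))
```

The design choice is to keep what happens decoupled from *when* it happens. A nightly cron job becomes just another event source.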

Jenny Hurn:

Yeah. And I think when we consider, like, what are the architectural fundamentals that you need to really enable this agentic future, there's a couple things that stand out. Right? So if you go to the next slide, Cody, we see things like you need your data infrastructure, you need your orchestration, you need your AI agents, and you need your version control. Can you talk a little bit about each of these and why they're important?

Cody Peterson:

Yeah. So starting with data infrastructure. This is your standard databases, your data warehouses, your data lakes. This is where your data lives. This is Snowflake, Databricks, BigQuery, DuckDB, whatever you're using. You need that foundation.

You need orchestration. So orchestration is how you actually run your pipelines, how you schedule them, how you trigger them. This is traditionally tools like Airflow or Dagster. But what we're seeing now is orchestration that's much more event-driven and agentic.
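Much of "automatic dependency management" comes down to deriving run order from declared dependencies. dbt builds its graph from `ref()` and `source()` calls. The sketch below instead assumes an already-extracted dependency dict, using the table names from the demo later in the session. It relies on Python's standard `graphlib` to compute a valid order, plus batches of nodes that could run in parallel. It illustrates the idea only and is not how any particular orchestrator is implemented.

```python
from graphlib import TopologicalSorter

# Each node maps to the set of upstream nodes it depends on.
# The dict format is an assumption for illustration only.
dependencies = {
    "raw_customers": set(),
    "raw_orders": set(),
    "customer_orders": {"raw_customers", "raw_orders"},
}


def run_order(graph):
    """Return one valid execution order: every node comes after its upstreams."""
    return list(TopologicalSorter(graph).static_order())


def ready_batches(graph):
    """Group nodes into batches whose members have no dependencies on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


order = run_order(dependencies)
batches = ready_batches(dependencies)
```

The relative order of the two raw sources is not fixed. Only the constraint that `customer_orders` runs after both of them is guaranteed.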

You need AI agents. So these are the things that are actually going to be able to understand your data, understand your pipelines, and take actions on them.

And then you need version control. This is Git. This is GitHub, GitLab, Bitbucket. This is how you track changes to your code, how you collaborate with your team, how you ensure that you have a history of what's happened and you can roll back if needed.

Jenny Hurn:

Yeah. And I think what's exciting about this is when you bring all of these pieces together, you get something that's really powerful. And I think we're going to see that today in the hands-on lab. So let's go ahead and jump into the demo. Cody, do you want to share your screen and walk us through what we're going to be building today?

Cody Peterson:

Yeah. Absolutely. So let me share my screen here. Alright.

So what we're going to be building today is a simple dbt project that's orchestrated through Ascend. And we're going to show how Otto, our AI agent, can help us with things like monitoring, responding to errors, and even making changes to our dbt models. So let's get started.

First thing I'm going to do is I'm going to create a new workspace in Ascend. So I'm going to go to the home page here, and I'm going to click "Create Workspace." I'm going to call this "dbt Demo." And I'm going to use our Ascend managed Git repository for this. In a production environment, you'd probably want to connect your own GitHub or GitLab repo, but for today, we'll just use the managed one to make it easy.

Alright. So now I have my workspace created. The next thing I'm going to do is I'm going to create a new data flow. So I'm going to click "Create Data Flow." And I'm going to call this "Customer Orders." And what this data flow is going to do is it's going to take some raw customer data and some raw order data, and it's going to join them together using dbt.

So let me set up the sources first. I'm going to add a DuckDB source. DuckDB is just a really lightweight in-memory database that's great for demos. I'm going to call this "Raw Customers." And I'm going to paste in some sample data here. Let me grab that. Alright. So I've got some customer data here with customer ID, name, and email.

And then I'm going to do the same thing for orders. I'm going to add another DuckDB source. I'm going to call this "Raw Orders." And I'm going to paste in some sample order data. Alright. So now I have my two sources set up.
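In the demo, the sources are created from pasted sample data. As a rough stand-in, this sketch loads CSV text into an in-memory database. It uses SQLite from Python's standard library rather than DuckDB, so it runs without extra packages. The sample rows are made up, and so are the order columns (`order_id`, `customer_id`, `amount`), because the session only names the customer columns.

```python
import csv
import io
import sqlite3

# Hypothetical sample data standing in for what was pasted in the demo.
RAW_CUSTOMERS_CSV = """customer_id,name,email
1,Ada,ada@example.com
2,Grace,grace@example.com
3,Linus,linus@example.com
"""

RAW_ORDERS_CSV = """order_id,customer_id,amount
100,1,25.00
101,1,40.50
102,2,12.75
"""


def load_csv(conn, table, text):
    """Create `table` from CSV text (all columns stored as TEXT) and return the row count."""
    reader = csv.DictReader(io.StringIO(text))
    columns = reader.fieldnames
    rows = list(reader)
    col_sql = ", ".join(f'"{c}" TEXT' for c in columns)
    conn.execute(f'CREATE TABLE "{table}" ({col_sql})')
    placeholders = ", ".join("?" for _ in columns)
    conn.executemany(
        f'INSERT INTO "{table}" VALUES ({placeholders})',
        [tuple(row[c] for c in columns) for row in rows],
    )
    return len(rows)


# SQLite in-memory mode is used only because it ships with Python.
conn = sqlite3.connect(":memory:")
loaded = {
    "raw_customers": load_csv(conn, "raw_customers", RAW_CUSTOMERS_CSV),
    "raw_orders": load_csv(conn, "raw_orders", RAW_ORDERS_CSV),
}
```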

The next thing I'm going to do is I'm going to add a dbt component. So I'm going to click "Add Component," and I'm going to select "dbt Core." And this is where the magic happens. So Ascend has a native dbt integration. You can write your dbt models right here in the platform, or you can sync them from your Git repository.

For today, I'm going to write a simple model right here. So I'm going to create a new model. I'm going to call it "customer_orders.sql." And I'm going to write a simple SQL query that joins customers and orders together. Let me write that out.

Alright. So I've got a simple query here that selects from my raw customers and joins them with my raw orders on customer ID. And now I can run this. So I'm going to click "Run," and Ascend is going to execute this dbt model. And you can see it's running. It's pulling the data from my sources, it's running the dbt transformation, and it's writing the output. And there we go. We've got our customer orders table created.
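The model described here is a single join. The sketch below shows what `customer_orders.sql` might contain and runs it against SQLite, so only the standard library is needed. In a real dbt project, the table names would normally be written as `{{ ref(...) }}` or `{{ source(...) }}` references. Plain names are used here so the query runs directly. The order columns and rows are assumptions.

```python
import sqlite3

# Possible body of customer_orders.sql (plain table names instead of Jinja refs).
CUSTOMER_ORDERS_SQL = """
select
    c.customer_id,
    c.name,
    c.email,
    o.order_id,
    o.amount
from raw_customers as c
join raw_orders as o
    on c.customer_id = o.customer_id
"""

conn = sqlite3.connect(":memory:")
conn.executescript("""
create table raw_customers (customer_id integer, name text, email text);
create table raw_orders (order_id integer, customer_id integer, amount real);
insert into raw_customers values
    (1, 'Ada', 'ada@example.com'),
    (2, 'Grace', 'grace@example.com'),
    (3, 'Linus', 'linus@example.com');
insert into raw_orders values (100, 1, 25.0), (101, 1, 40.5), (102, 2, 12.75);
""")

# "Materialize" the model as a table, roughly what a table materialization does.
conn.execute(f"create table customer_orders as {CUSTOMER_ORDERS_SQL}")
rows = conn.execute("select * from customer_orders order by order_id").fetchall()
```

This is an inner join, so customer 3, who has no orders, drops out of the result. A `left join` would keep customers without orders and fill their order columns with nulls.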

So that's the basics of how you set up a dbt project in Ascend. But now let's talk about the agentic part. So one of the cool things about Ascend is that we have Otto, our AI agent, built right into the platform. And Otto can do things like monitor your pipelines, respond to errors, and even make changes to your dbt models. So let me show you an example.

Let's say one of my source tables changes. Maybe the schema changes or maybe there's a data quality issue. Otto can detect that and respond to it. So let me simulate that. I'm going to go back to my raw customers source, and I'm going to change the schema. I'm going to add a new column called "phone_number." And now when I run my pipeline again, it's going to detect that schema change. And Otto is going to be able to see that and respond to it. So let me run this.
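At its simplest, detecting a schema change means comparing column lists between runs. The hypothetical helper below reports added and removed columns. In practice the lists would come from the warehouse's metadata (for example, `information_schema.columns`), and a fuller check would also compare data types. The session does not describe how Otto detects changes, so treat this as an illustration only.

```python
def diff_columns(previous, current):
    """Compare two column lists and report which columns were added or removed."""
    prev, curr = set(previous), set(current)
    return {
        "added": [c for c in current if c not in prev],
        "removed": [c for c in previous if c not in curr],
    }


# Hypothetical snapshots of raw_customers before and after the demo's change.
before = ["customer_id", "name", "email"]
after = ["customer_id", "name", "email", "phone_number"]

change = diff_columns(before, after)
schema_changed = bool(change["added"] or change["removed"])
```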

Alright. So now you can see Otto detected that there's a schema change. And Otto can actually make suggestions on how to handle that. So Otto might suggest updating the dbt model to include that new column, or Otto might suggest creating a new model that uses that column. And you can choose to accept Otto's suggestions or not. So that's one example of how Otto can help you with monitoring and responding to changes.

Another example is error handling. So let's say there's an error in one of my dbt models. Maybe there's a syntax error or maybe there's a data quality issue. Otto can detect that error and help you fix it. So let me simulate that. I'm going to go into my customer orders model, and I'm going to introduce a syntax error. I'm going to misspell "customer_id." I'm going to spell it "custome_id" without the R. And now when I run this, it's going to fail.

Alright. So you can see it failed. There's an error. And Otto detected that error. And Otto can actually help you fix it. So Otto can say, "Hey, it looks like you have a syntax error here. Did you mean 'customer_id' instead of 'custome_id'?" And you can accept that suggestion, and Otto will fix it for you. So that's how Otto can help with error handling.
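A misspelled column surfaces when the query runs and the database cannot resolve `custome_id`. A "did you mean" hint like the one above can come from plain string similarity. The sketch uses Python's standard `difflib`. This is an assumed heuristic, not how Otto works, which the session doesn't describe. The list of known columns is also hypothetical.

```python
import difflib


def suggest_column(unknown, known_columns):
    """Return the closest known column name, or None if nothing is similar enough."""
    matches = difflib.get_close_matches(unknown, known_columns, n=1, cutoff=0.6)
    return matches[0] if matches else None


# Columns available to the model; the order columns are assumptions.
known = ["customer_id", "name", "email", "order_id", "amount"]

suggestion = suggest_column("custome_id", known)
hint = f"Did you mean '{suggestion}' instead of 'custome_id'?" if suggestion else None
```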

And then the last thing I want to show you is how Otto can help with making changes to your dbt models. So let's say you want to add a new column or you want to change the logic of a model. You can actually ask Otto to do that for you. So let me show you an example. I'm going to open up the Otto chat here. And I'm going to say, "Otto, can you update the customer orders model to include the phone number column from the raw customers table?"

And Otto is going to understand that request, it's going to go into the dbt model, it's going to update the SQL to include that phone number column, and it's going to save it. So let me send that. Alright. So Otto is processing that request. And you can see Otto went into the model, updated the SQL to include phone_number, and saved it. And now when I run this pipeline, it's going to include that phone number column. So that's how Otto can help you make changes to your dbt models.
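After the upstream change, the model only needs one more column in its select list. This sketch reproduces the sequence in SQLite. It first adds `phone_number` upstream, as in the demo's simulated schema change, and then runs the updated query. The table contents and order columns are hypothetical.

```python
import sqlite3

# Updated model: the same join as before, now also selecting phone_number.
UPDATED_SQL = """
select c.customer_id, c.name, c.email, c.phone_number, o.order_id, o.amount
from raw_customers as c
join raw_orders as o on c.customer_id = o.customer_id
order by o.order_id
"""

conn = sqlite3.connect(":memory:")
conn.executescript("""
create table raw_customers (customer_id integer, name text, email text);
create table raw_orders (order_id integer, customer_id integer, amount real);
insert into raw_customers values (1, 'Ada', 'ada@example.com');
insert into raw_orders values (100, 1, 25.0);
-- Simulate the upstream schema change from the demo.
alter table raw_customers add column phone_number text;
update raw_customers set phone_number = '555-0100' where customer_id = 1;
""")

rows = conn.execute(UPDATED_SQL).fetchall()
```

If the old model ran before the `alter table`, it would still succeed. Adding a column does not break a query that lists its columns explicitly, which is why a change like this is a suggestion rather than a failure.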

So those are just a few examples of how you can use Otto with dbt in Ascend. There's a lot more you can do. You can connect Otto to Slack so Otto can notify you when things happen. You can connect Otto to other tools using MCP servers. You can set up custom triggers and automations. So there's a lot of power here. And we're really excited to see what people build with this.
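The session doesn't show how Otto's Slack connection works. For comparison, one generic way for any pipeline to post alerts to Slack is an incoming webhook, which accepts a JSON body with a `text` field. The sketch builds the request without sending it. The webhook URL is a placeholder, and `build_alert` is a hypothetical helper.

```python
import json
import urllib.request

# Placeholder: Slack issues a unique incoming-webhook URL per channel.
WEBHOOK_URL = "https://hooks.slack.com/services/XXX/YYY/ZZZ"


def build_alert(flow, component, status, detail):
    """Build (but do not send) a Slack incoming-webhook request for a pipeline event."""
    payload = {"text": f"[{status}] {flow} / {component}: {detail}"}
    return urllib.request.Request(
        WEBHOOK_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_alert("Customer Orders", "customer_orders", "FAILED", "unknown column custome_id")
# urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```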

Jenny Hurn:

Yes. Thanks so much, Cody. I think it's pretty exciting to consider, like you said, the broader implications. Today's session was quick and fast-paced. Someone said, yeah, it was fast-paced; there was a lot to keep up with. It was also pretty simple. Right? We just orchestrated a flow and deployed it.

But I think when we consider the implications of what we can do when we string together some of these workflows where agents have the ability not just to understand the context of our ecosystem because it sees all the code in our repository, it sees all the run history. It sees all the data, the metadata associated with these tables. But we can also connect it to tools that allow it to take action.

And so there are things like MCP servers, where it can connect to Slack, or the tool calls we saw within Ascend, where it could run flows, list flows, or list files. Having that context for the agents, having the tools they can operate with, and then having the triggers of the orchestration system, where system events can trigger those agents to do things, I think makes a lot of sense. And, hopefully, people got a small taste of it even though it's a really quick session today.
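Under the Model Context Protocol (MCP), an agent invokes a tool by sending a JSON-RPC 2.0 request with method `tools/call`, whose params hold the tool `name` and its `arguments`. This sketch only serializes such messages. The tool names `list_flows` and `run_flow` are hypothetical, modeled on the actions mentioned above. A real server advertises its own tools through `tools/list`.

```python
import itertools
import json

# JSON-RPC request ids must be unique per session; a counter is enough here.
_ids = itertools.count(1)


def tool_call(name, arguments):
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


# Hypothetical tools; names and argument shapes are assumptions.
list_msg = json.loads(tool_call("list_flows", {}))
run_msg = json.loads(tool_call("run_flow", {"flow": "customer_orders"}))
```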

I know we are almost at time. If anyone has any questions, feel free to throw those in the Q&A tab. We have been answering questions as we've gone, and we had a lot of questions on Git and how that operates. One thing I did want to point out, Cody, and maybe you can talk to this more, is that we had a fairly limited GitOps flow within the Ascend platform today: merging main into a branch, or deploying to a deployment from a branch. Can you talk a little bit about how teams would use this with their own GitOps? Maybe they already have a GitOps flow they like to follow. How can they use it and still work with Ascend?

Cody Peterson:

Yeah. So we take an approach where we basically make it easy to get started. So as you saw, we have Ascend managed repos, but you can basically connect your own repo, whether that's GitHub, GitLab, Bitbucket, and basically use your existing branch protections, use your existing PR process, use your existing CI there.

So it really just integrates very nicely, and that's the approach we try to take: give you a nice, easy starting point. We ship DuckDB so you can run things immediately, but we integrate with Snowflake, Databricks, and BigQuery, and with all of your repos and GitOps workflows.

Jenny Hurn:

Yeah. And we would really recommend, typically, in a production environment, you'd probably connect to your own GitHub or GitLab. Cool. I know we're at time.

A quick note here. Feel free to scan that QR code or you can click this button to book a demo with our team if you're curious to meet with us and learn more about how this might work in your own kind of environment.

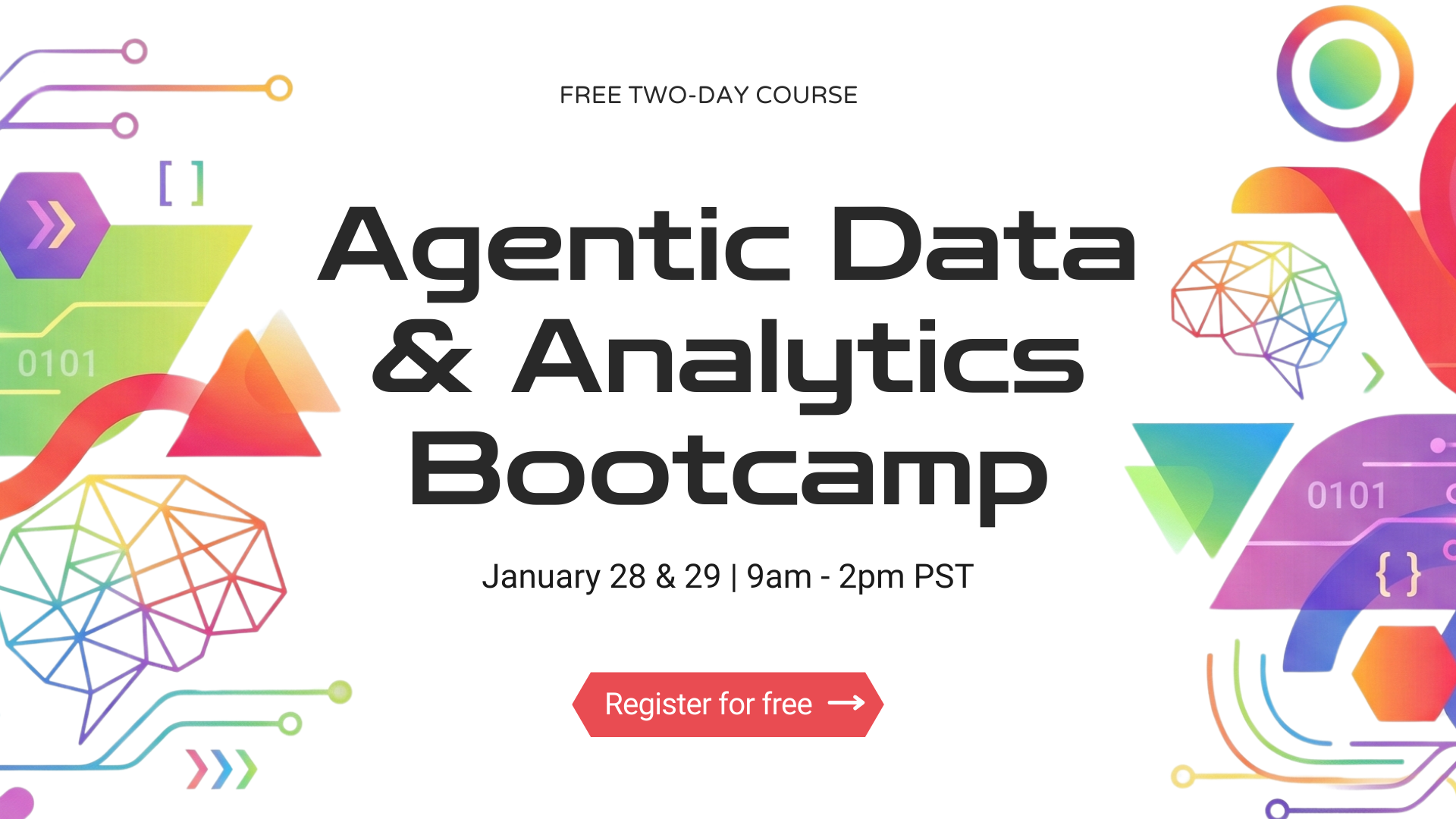

And then one more extended invitation to everyone. Next week is our Agentic Data and Analytics Bootcamp. We've teased it a couple times here. It'll be basically ten hours of content. Today was really fast, right? Forty-five minutes to try to get through a bunch of stuff. We'll have much more time next week to go a little slower and really dive into the nitty-gritty of how you would do some of these things within the Ascend platform, as well as general frameworks you might want to consider for working with AI as a data and analytics engineer.

But we also have some really exciting releases in conjunction with that bootcamp that we kind of wanted to share with everyone before we get off the call today. So, Cody, I'll let you share some of those things.

Cody Peterson:

Yeah. I am so excited for these. You may have seen the new home page, which I flashed up. With that, we're going to have a ton of new agentic features, including some I saw mentioned in the chat: permissions and being able to approve things that Otto does, and giving Otto the ability to write SQL queries for you more natively, right in the platform.

Also, data visualization will be huge. So, often, we see people build dashboards in Tableau or Power BI on top of data flows that you orchestrate through Ascend. But you'll be able to do that in a lot of cases just directly in the app and send your colleagues a link and get those dashboards right there, really making Ascend that all-in-one platform so you don't have to stitch anything together.

And then the big one for everyone as well is we're going to be launching a new starter plan, exact name and pricing TBD, but the intent is to have a low-cost monthly subscription so you can really play around with Ascend, use our agents, use the workspaces. These will have deployments turned off really just more for getting started, getting familiar, and really going through that agentic journey that so many people are going through.

We're really passionate about getting analytics engineers and data engineers up to speed in the same way we saw with software engineers and many others. And, of course, the dbt integration: it's in public preview as of today. We're going to iron out some of the kinks and make it generally available, aiming for GA in February or possibly March, so please do report any bugs to us. Lots of great stuff coming down the pipe.

Jenny Hurn:

Awesome. Well, thank you so much everyone for the time today. Really appreciate it. I know we're a couple minutes over too. So thanks so much to you, Cody, for walking us through this. Really fun to see. You know? I know people love dbt, and so being able to integrate this really intelligent system that can work with their dbt models, monitor those things, respond to things is really exciting.

And we will see everyone next week at the Agentic Data and Analytics Bootcamp. I'll send a follow-up email later today with an invite to that in case you haven't registered already as well as a couple follow-up docs if you're interested in getting any more information or trying any other things this week. Have a great day, everyone, and we look forward to seeing you next time.

