What happens when you give domain experts a powerful agentic data platform and one week to solve real-world problems? You get production-grade pipelines that automate regulatory compliance, surface investment opportunities across the country, and translate macroeconomic data into plain-English narratives for investors.

Our recent hackathon challenged participants to build end-to-end data pipelines using Ascend’s platform and its agentic AI assistant, Otto. The results went far beyond prototypes: our three winners shipped working systems that demonstrate the real power of agentic data engineering. Here’s a look at what they built and what they learned along the way.

First Place Winner: Bernadine Pierre

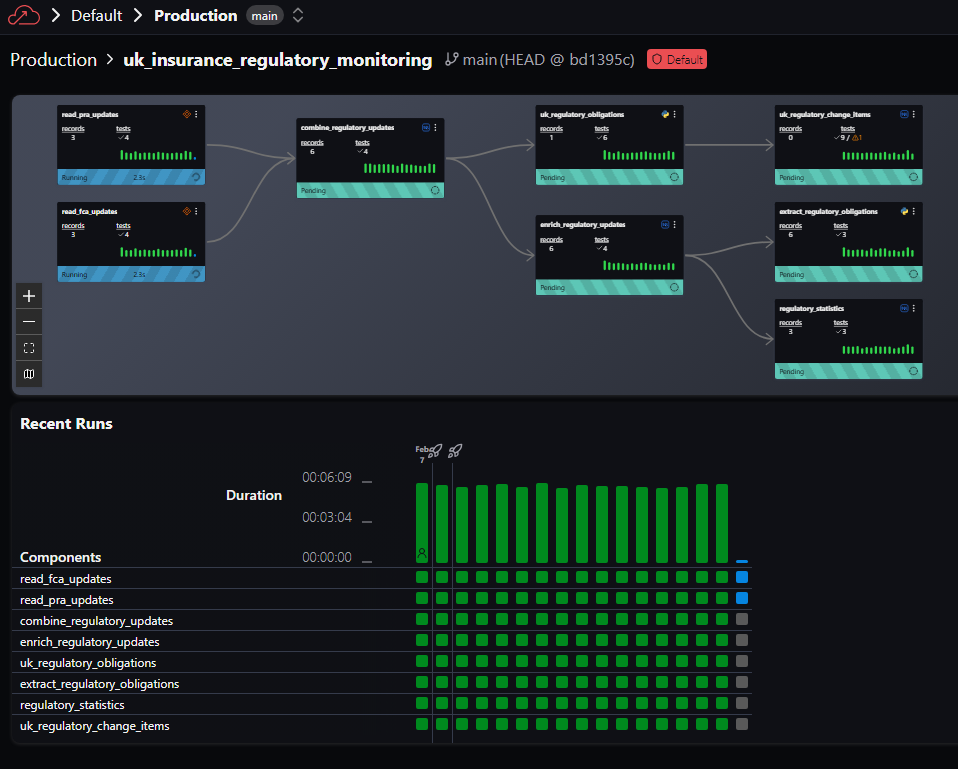

UK Insurance Regulatory Monitoring Pipeline

Bernadine built a deployed, scheduled, agentic data pipeline that automates the entire regulatory interpretation workflow. The pipeline ingests publications from the UK's Financial Conduct Authority (FCA) and Prudential Regulation Authority (PRA), then uses a custom AI agent—built with Ascend's agentic framework—to extract enforceable obligations from dense regulatory text and send insights to key stakeholders.

How it works

The pipeline runs daily at 6:30 AM UK time and follows a clear sequence. It begins by ingesting the latest FCA and PRA publications through read connectors, then normalizes and chunks the text to isolate obligation-bearing language.

From there, a custom regulatory interpretation agent converts the text into structured JSON obligations with verbatim citations. Each obligation is scored on a 0–100 impact scale based on regulatory severity, scope, urgency, and Consumer Duty risk. Finally, obligations scoring 70 or above are automatically escalated via email alerts to the relevant compliance teams.
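To make the scoring step concrete, here is a minimal Python sketch of weighted impact scoring with the 70-point escalation cut-off. The four factor names and the threshold come from the description above; the weights, the `Obligation` structure, and the function names are hypothetical, and this is not Bernadine's implementation.

```python
from dataclasses import dataclass, field

# Hypothetical weights: the post names the four factors but not how they are combined.
WEIGHTS = {
    "severity": 0.35,
    "scope": 0.25,
    "urgency": 0.25,
    "consumer_duty_risk": 0.15,
}
ESCALATION_THRESHOLD = 70  # per the post: obligations scoring 70 or above are escalated


@dataclass
class Obligation:
    obligation_id: str
    citation: str  # verbatim text from the source publication
    factors: dict = field(default_factory=dict)  # factor name -> rating on a 0-100 scale


def impact_score(ob: Obligation) -> int:
    """Weighted average of the factor ratings, clamped to the 0-100 scale."""
    raw = sum(weight * ob.factors.get(name, 0.0) for name, weight in WEIGHTS.items())
    return round(min(100.0, max(0.0, raw)))


def obligations_to_escalate(obligations):
    """Return (obligation, score) pairs at or above the escalation threshold."""
    scored = [(ob, impact_score(ob)) for ob in obligations]
    return [(ob, score) for ob, score in scored if score >= ESCALATION_THRESHOLD]
```

Because the weights sum to 1, the combined score stays on the same 0–100 scale as the individual ratings, which keeps the escalation threshold easy to reason about.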

Check out her project on GitHub →

What makes it stand out

Bernadine's approach inspired us as a use case for agentic data engineering. Compliance work is often manual and tedious; using data ingestion to track relevant publications, and layering intelligent systems on top, helps teams prioritize their work effectively.

The pipeline's execution was also thoughtful and creative. Agents were deliberately constrained to UK insurance regulation, preserving FCA and PRA intent without adding inference. Every output traces back to its source publication, and the entire pipeline writes to Snowflake for reporting and audit trails.

"Agentic AI is most valuable in regulated environments when tightly constrained. Governance and explainability matter more than free-form generation. Ascend and Otto significantly reduced the time from idea to deployed pipeline while maintaining audit discipline."

— Bernadine Pierre

Second Place Winner: Prashant Appukuttan

Real Estate Investment Prospecting Dashboard

Prashant and a co-investor currently manage a duplex in Norfolk, Virginia, and wanted a data-driven way to identify where to invest next. Rather than staying limited to their local market, they used Ascend and Otto to build an end-to-end analytics platform that evaluates opportunities nationwide.

How it works

The system is structured around three core workflows. The first establishes a geographic baseline by pulling US Census Bureau data (population growth, migration inflows) and regulatory data across all 50 states to calculate a Market Viability Score (MVS).

The second workflow drills into county-level analysis, scoring individual counties on investability using rent-to-price ratios, growth trends, and local market conditions.

The third workflow targets property-level inventory, fetching listings, historical prices, and rental data for the top 25 recommended counties, then computing property-level metrics like cap rate and cash-on-cash return.
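Cap rate and cash-on-cash return follow standard real estate definitions: cap rate is net operating income (NOI) over price, and cash-on-cash return is annual pre-tax cash flow over the cash actually invested. The sketch below uses those textbook formulas; the function names and dollar figures are illustrative assumptions, not Prashant's data or code.

```python
def net_operating_income(annual_rent: float, operating_expenses: float) -> float:
    """NOI: gross annual rent minus operating expenses (mortgage payments excluded)."""
    return annual_rent - operating_expenses


def cap_rate(annual_rent: float, operating_expenses: float, price: float) -> float:
    """Capitalization rate: NOI divided by the purchase price."""
    return net_operating_income(annual_rent, operating_expenses) / price


def cash_on_cash(
    annual_rent: float,
    operating_expenses: float,
    annual_debt_service: float,
    cash_invested: float,
) -> float:
    """Annual pre-tax cash flow (NOI minus mortgage payments) over total cash invested."""
    cash_flow = net_operating_income(annual_rent, operating_expenses) - annual_debt_service
    return cash_flow / cash_invested


# Illustrative numbers only: a $200,000 property renting for $1,800/month,
# $7,600/year in expenses, $9,000/year in mortgage payments, $50,000 cash invested.
print(f"cap rate: {cap_rate(21_600, 7_600, 200_000):.1%}")  # cap rate: 7.0%
print(f"cash-on-cash: {cash_on_cash(21_600, 7_600, 9_000, 50_000):.1%}")  # cash-on-cash: 10.0%
```

Cap rate ignores financing, so it compares properties on equal footing; cash-on-cash return reflects the leverage a specific investor actually uses.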

Check out his demo on YouTube →

What makes it stand out

The resulting dashboards let Prashant drill from a national view all the way down to individual properties. The system surfaced unexpected opportunities—Indiana, for example, appeared as a strong investment target despite low migration rates, thanks to favorable rent-to-price ratios. The pipeline even compiled landlord-friendliness data by analyzing state and county regulations around rent control, eviction timelines, and tenant protections.

All of this runs on Ascend with DuckDB as the analytics engine. Inventory data refreshes intraday, while the slower-changing census data refreshes less frequently.

"We built this in two days and it introduced us to investment targets we would never have considered. The system actually tested our assumptions."

— Prashant Appukuttan

Ready to turn investment hunches into data-driven decisions?

Start your free trial of Ascend →

Third Place Winner: Nashe Chibanda

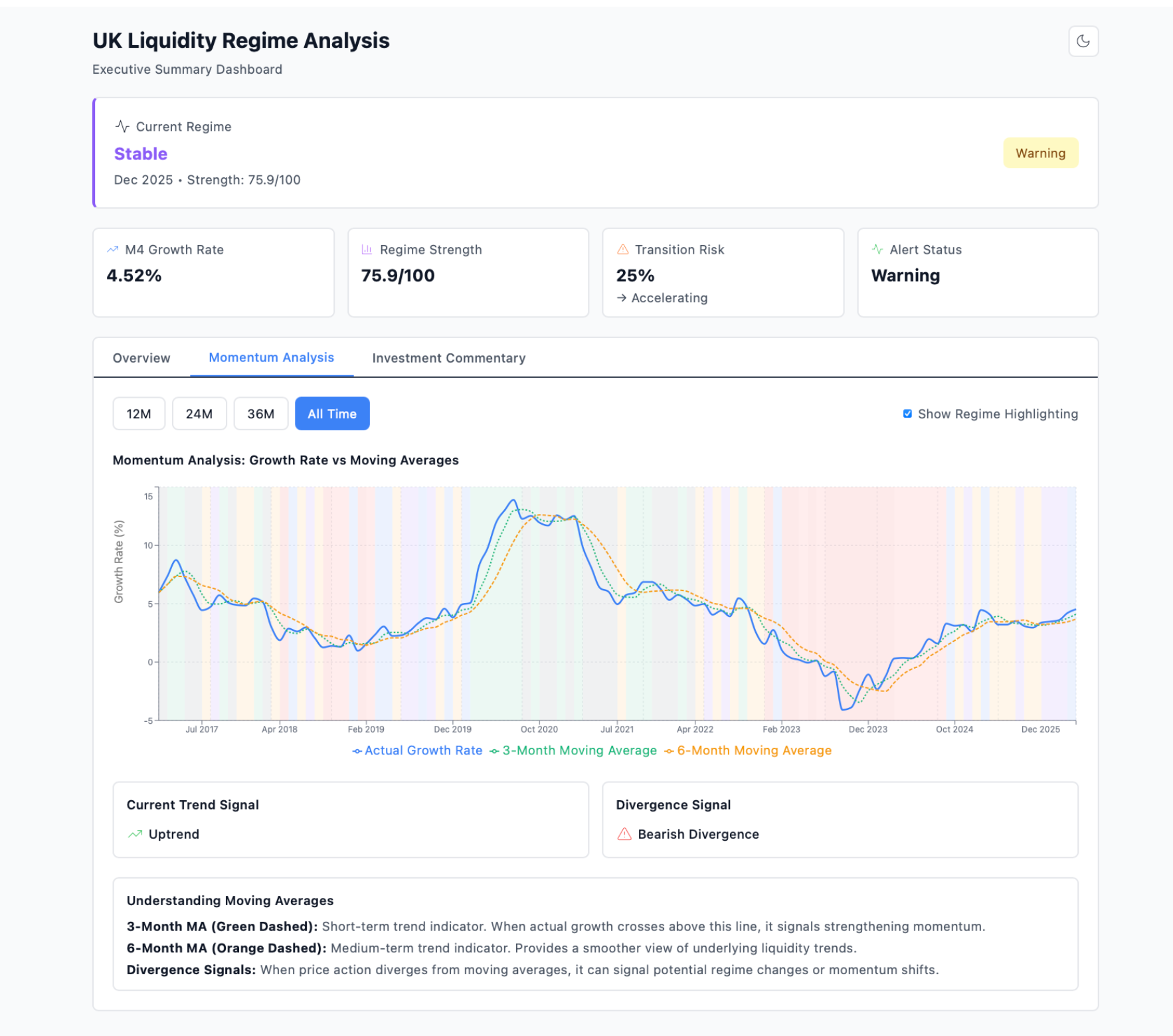

UK Liquidity Regime Analysis

Nashe built an automated pipeline that transforms Bank of England M4 money supply data into plain-English investment narratives. The system translates monetary statistics into context that Limited Partners (LPs) and General Partners (GPs) can actually act on—without needing a background in central bank data.

How it works

The seven-stage pipeline flows from raw data ingestion through growth rate calculations, regime classification, momentum tracking, early warning indicators, narrative generation, and finally automated monthly email reports. The system identifies whether the UK is in an expansionary, contractionary, stable, accelerating, or decelerating liquidity regime, and assigns a regime strength score (0–100).
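As an illustration of the growth-rate and regime-classification stages, the sketch below computes year-over-year growth from monthly M4 levels and maps growth plus three-month momentum onto the five regimes named above. The cut-off values and the precedence of momentum over level are hypothetical choices for this example; the post does not describe Nashe's actual rules, and the strength score is omitted.

```python
from typing import List, Optional

# Hypothetical cut-offs in percentage points; the post does not publish its rules.
EXPANSION_GROWTH = 6.0    # YoY M4 growth at or above this -> expansionary
CONTRACTION_GROWTH = 1.0  # YoY M4 growth at or below this -> contractionary
MOMENTUM_BAND = 1.0       # 3-month change in growth beyond +/- this -> accelerating/decelerating


def yoy_growth(levels: List[float]) -> List[Optional[float]]:
    """Year-over-year % growth of a monthly series; None for the first 12 months."""
    return [
        None if i < 12 else (levels[i] / levels[i - 12] - 1) * 100
        for i in range(len(levels))
    ]


def classify(growth_now: float, growth_3m_ago: float) -> str:
    """Map current growth and its 3-month momentum onto one of five regimes."""
    momentum = growth_now - growth_3m_ago
    if momentum >= MOMENTUM_BAND:
        return "accelerating"
    if momentum <= -MOMENTUM_BAND:
        return "decelerating"
    if growth_now >= EXPANSION_GROWTH:
        return "expansionary"
    if growth_now <= CONTRACTION_GROWTH:
        return "contractionary"
    return "stable"


def latest_regime(levels: List[float]) -> str:
    """Classify the latest month; needs 16 months so momentum has a 3-month lookback."""
    if len(levels) < 16:
        raise ValueError("need at least 16 monthly observations")
    growth = yoy_growth(levels)
    return classify(growth[-1], growth[-4])
```

Checking momentum first reports a fast-rising or fast-falling growth rate as a turning point even when its level is still high or low; a different precedence order would be equally defensible.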

It also calculates transition risk—the probability of a regime change—and generates specific investment implications for deal activity, valuations, portfolio management, and fundraising. Interactive dashboards built with React and Recharts provide drill-down views of growth trends, momentum analysis, and investment commentary.

What makes it stand out

Nashe is not a data engineer by background, though she has worked alongside data engineers on transformation projects. This pipeline shows what becomes possible when domain expertise meets agentic AI: Nashe described the analytical vision in investment terms, and AI agents translated it into a production-grade system with DuckDB, SQL transformations, Python API integrations, and automated email delivery.

"Without AI, this analysis would not have been possible. Otto transformed me from an observer of data engineering to a builder of production-grade pipelines."

— Nashe Chibanda

Bonus: Rounding out our Top 10, here are 7 other notable projects

The winning pipelines weren't the only impressive builds. Across the hackathon, participants tackled problems spanning oil and gas operations, urban mobility, media advocacy, telecom infrastructure, environmental monitoring, and city management. Here are seven more production-ready pipelines, built in a few hours.

Farheen Akbar — Pipeline Predictive Maintenance & Throughput Optimization

Farheen built a predictive analytics pipeline that identifies hydraulic bottlenecks, forecasts equipment failures, optimizes shutdown scheduling, and strategically applies drag-reducing agent (DRA) to maximize throughput within existing infrastructure.

The system uses ML-based failure prediction to generate maintenance windows with cost-benefit analysis, processes 1,000+ pipeline predictions with risk scores, and delivers an interactive dashboard showing maintenance events, estimated costs, high-risk pipelines, and DRA consumption.

Otto determined where DRA injection provides the highest ROI, enabling operators to expand pipeline throughput and reduce frictional pressure losses while lowering OPEX.

"This solution provides a scalable, data-driven framework that supports safer, more efficient, and economically optimized pipeline operations."

— Farheen Akbar

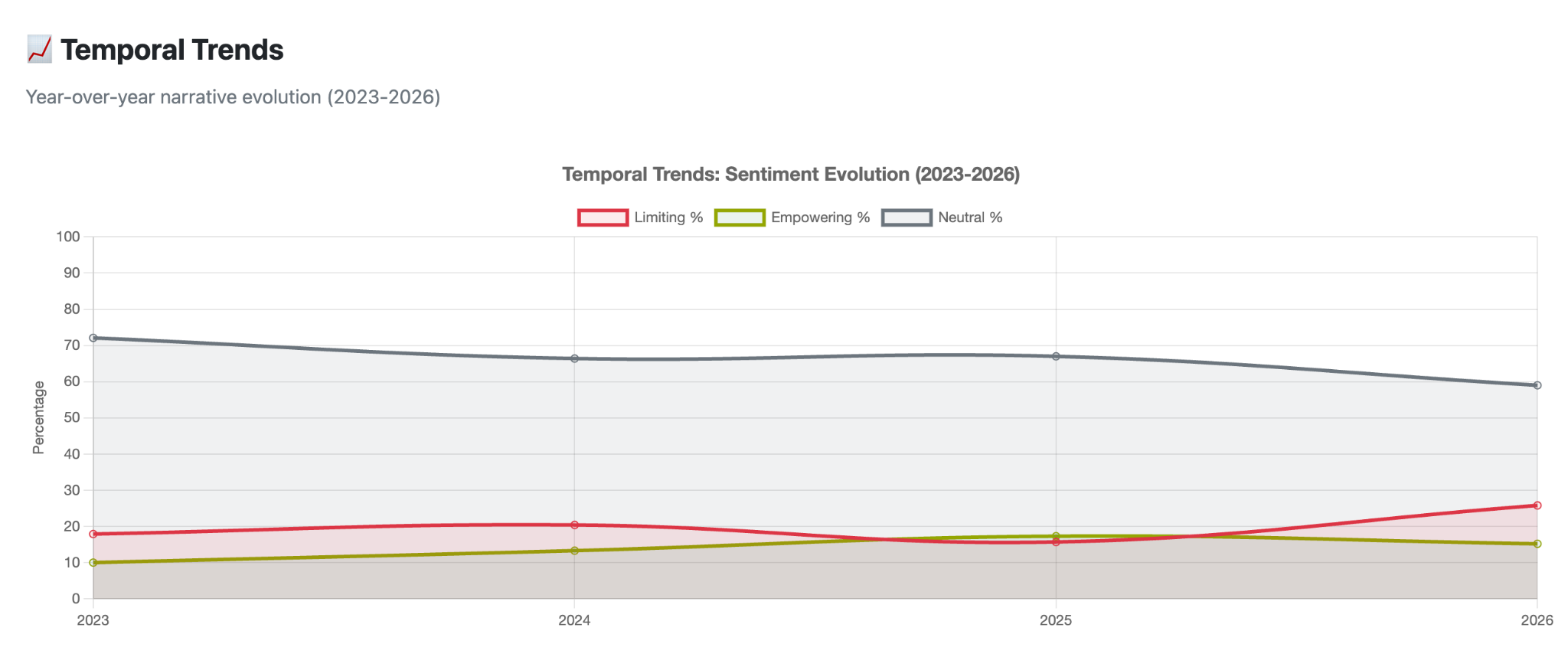

Suha Islaih — Longevity Narratives

Suha built a dual-pipeline system that analyzes 11,929 Canadian articles published from 2023 to 2026, with automated weekly monitoring via the MediaCloud API. The pipeline runs two sentiment analyses, one GPT-based and one based on lexicon keyword-density scoring; the two agree 57% of the time, with each capturing different nuances. It processes 50–200 new articles weekly and generates interactive advocacy dashboards covering harm assessment, outlet targeting, geographic distribution, and temporal trends.
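The sketch below illustrates the lexicon-based half of that comparison and how an agreement rate between two classifiers can be computed. The word lists, labels, and function names are hypothetical; the GPT-based classifier is not shown, and its labels would simply be passed in as the second list.

```python
import re

# Hypothetical lexicons for illustration; the project's actual word lists are not given in the post.
EMPOWERING = {"active", "vibrant", "thriving", "independent", "contributing"}
LIMITING = {"frail", "burden", "decline", "dependent", "vulnerable"}


def lexicon_label(text: str) -> str:
    """Label text by net keyword density: (empowering hits - limiting hits) / word count."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return "neutral"
    net = sum(w in EMPOWERING for w in words) - sum(w in LIMITING for w in words)
    density = net / len(words)
    if density > 0:
        return "empowering"
    if density < 0:
        return "limiting"
    return "neutral"


def agreement_rate(labels_a: list, labels_b: list) -> float:
    """Share of articles where two classifiers assigned the same label."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and the same length")
    return sum(a == b for a, b in zip(labels_a, labels_b)) / len(labels_a)
```

A moderate agreement rate is not necessarily a defect: a keyword counter and a language model pick up different signals, which is why running both can surface nuances either one alone would miss.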

The system found evidence that advocacy works: 2025 saw a 4% increase in empowering coverage and a 2.2% drop in limiting language. Weekly automated reports enable rapid response to narrative shifts.

Check out her interactive dashboards →

"One-time analysis ≠ strategy: Static reports become outdated; build production system instead."

— Suha Islaih

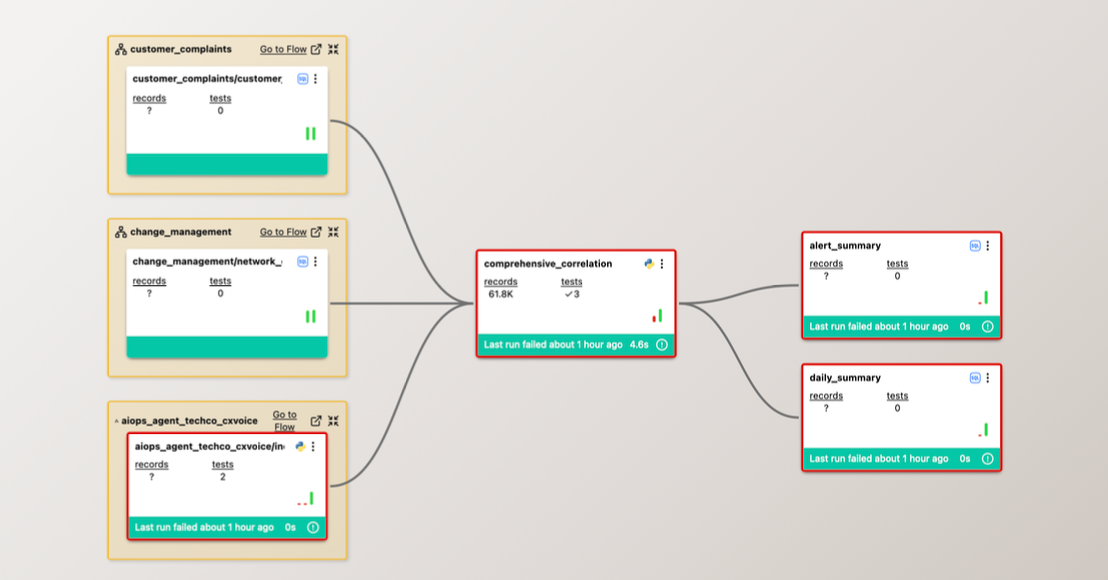

Yogesh Parekh — TechCo Voice Quality Monitoring

Yogesh built an AI-powered correlation engine that automatically connects the dots between VoLTE network incidents, customer complaints, and infrastructure changes. The pipeline processes three critical data streams—real-time call quality metrics (MOS scores, packet loss, jitter), customer support tickets classified by severity, and network change logs tracking configuration updates and maintenance activities.

The system's intelligence layer uses statistical analysis to identify patterns, detecting when incidents spike in a region following a network change or when customer complaints cluster around specific infrastructure events. It runs across a three-stage dependency chain processing 100,000+ call records, generating 10,000+ incident records, and evaluating over 61,000 incident-complaint-change combinations to surface probable causes.
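One simple way to enumerate incident-complaint-change combinations is a spatial and temporal join: keep only chains where a network change, an incident, and a complaint share a region and follow each other within a lookback window. This Python sketch assumes a six-hour window, a shared `region` key, and a hypothetical `Event` type; it stands in for, and is much simpler than, the statistical analysis the post describes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Event:
    ref: str
    region: str
    at: datetime


def follows(earlier: Event, later: Event, window: timedelta) -> bool:
    """True if `later` is in the same region and occurs within `window` after `earlier`."""
    gap = later.at - earlier.at
    return earlier.region == later.region and timedelta(0) <= gap <= window


def candidate_chains(changes, incidents, complaints, window=timedelta(hours=6)):
    """Enumerate change -> incident -> complaint chains that are close in space and time."""
    chains = []
    for ch in changes:
        for inc in incidents:
            if not follows(ch, inc, window):
                continue
            for c in complaints:
                if follows(inc, c, window):
                    chains.append((ch.ref, inc.ref, c.ref))
    return chains
```

The nested loops are fine for a sketch; at the scale mentioned above, the same join would normally be expressed in SQL or partitioned by region first so each change is only compared against nearby events.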

"It was very cool to see how well Otto could take the prompts and build the most suitable data sets, correlations, and precise summaries...Implementing this pipeline seemed like scratching the surface of Ascend's capabilities."

— Yogesh Parekh

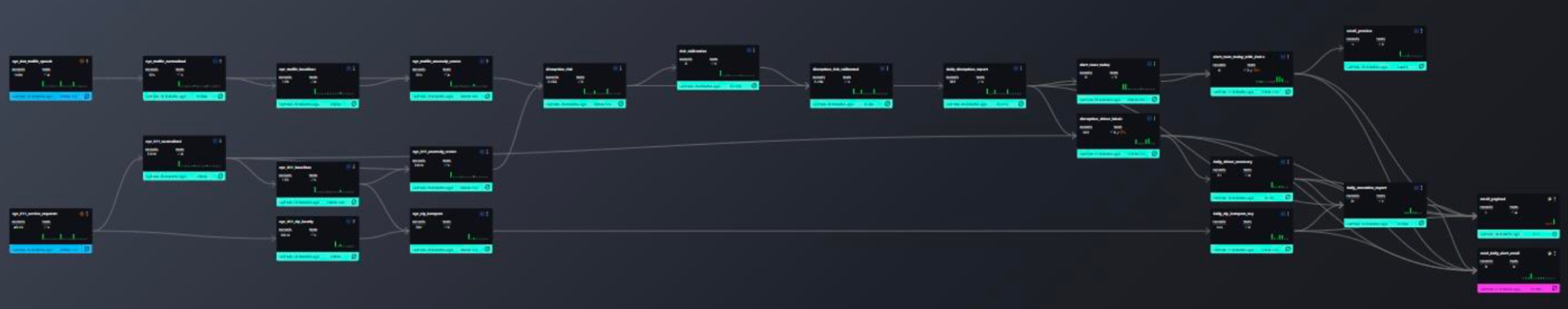

Ameya Chindarkar — NYC Urban Disruption Early Warning System

Ameya built an end-to-end early warning system that detects, explains, and spatially localizes urban disruptions across New York City. The pipeline combines two continuously updated public data sources—NYC 311 service requests (covering power outages, water issues, traffic signal failures, elevator outages, and heating complaints) and NYC DOT real-time traffic speeds—to spot trouble before it snowballs.

The data flows through four stages: ingestion and normalization, hourly aggregation, anomaly detection using z-scores against borough-level baselines, and a calibrated risk-scoring layer that dynamically flags high-risk events.
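The z-score step can be sketched in a few lines of Python: compare each borough's current hourly count against the mean and standard deviation of its own history. The three-standard-deviation threshold and the data shapes are assumptions for illustration, and the calibrated risk-scoring layer is not modeled.

```python
from statistics import fmean, stdev


def zscore_flags(history, current, threshold=3.0):
    """Flag boroughs whose current hourly count is `threshold` or more std devs above baseline.

    history: borough -> past hourly counts (the baseline)
    current: borough -> this hour's count
    """
    flags = {}
    for borough, counts in history.items():
        if borough not in current or len(counts) < 2:
            continue
        mean, sd = fmean(counts), stdev(counts)
        if sd == 0:
            continue  # flat baseline: the z-score is undefined
        z = (current[borough] - mean) / sd
        if z >= threshold:
            flags[borough] = round(z, 2)
    return flags
```

This version flags upward spikes, which suits 311 request counts. For DOT traffic speeds a disruption shows up as a drop, so the comparison would flip to `z <= -threshold`.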

"Building this project with Ascend and Otto showed me how much faster complex data pipelines can be developed with agentic tools. This made it possible to focus more on problem-solving and system design rather than getting stuck on boilerplate or setup work."

— Ameya Chindarkar

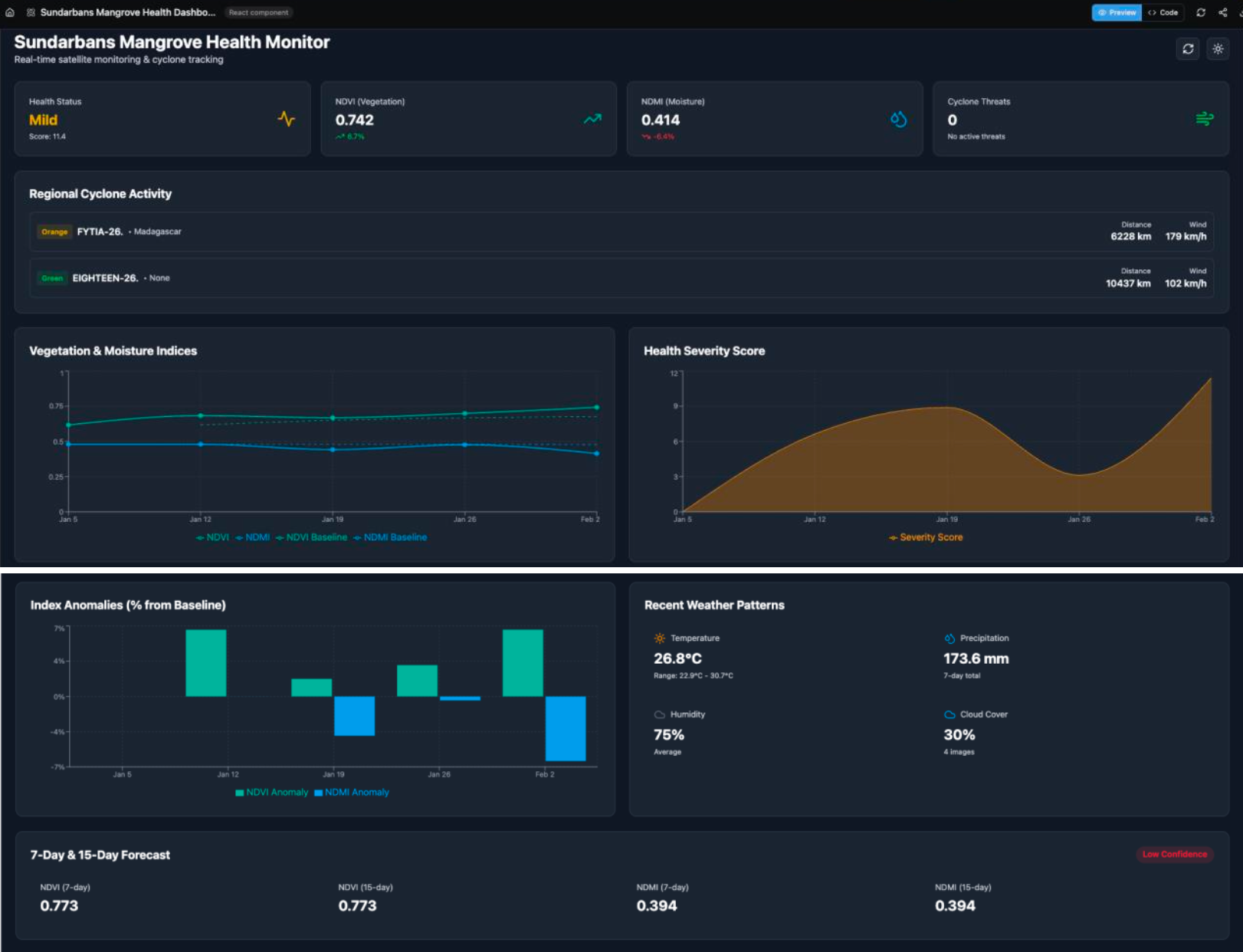

Sabbir Delowar — Sundarbans Mangrove Health Watch

Sabbir built an agentic Earth Observation (EO) pipeline that continuously monitors the health of the Sundarbans mangrove system using satellite imagery, weather forecasts, and cyclone tracking data. Rather than a one-off analysis, the pipeline runs on a weekly schedule, evaluates satellite-derived indicators over time, reasons about their significance, and produces decision-ready outputs with minimal human intervention.

The outputs include structured weekly summaries, trend and anomaly indicators, weather-informed short-term outlooks, cyclone awareness notes, and a continuously updating dashboard—all designed to be understandable by non-technical stakeholders, not just EO specialists. The approach is highly transferable and could be applied to forests, wetlands, agricultural monitoring, or coastal systems.

“By focusing on persistence, context, and uncertainty, the pipeline avoids false alarms while still surfacing early warning signals.” - Sabbir Delowar

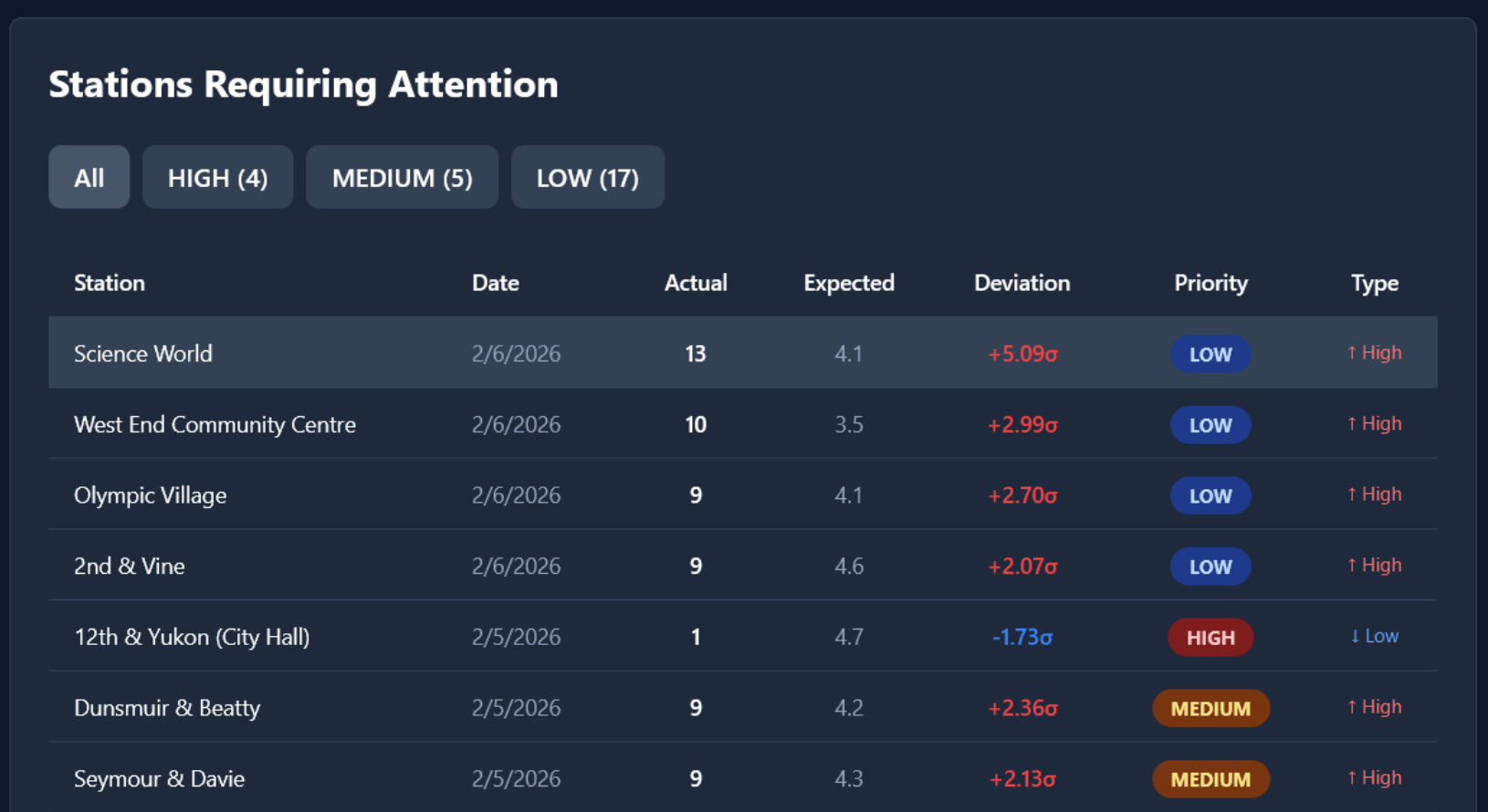

Julia Schmid — Bike-Share Operations Monitoring

Julia built an automated anomaly detection pipeline that combines synthetic Vancouver Mobi bike-sharing data with real-time weather data from the Open-Meteo API. The system computes 21-day rolling baselines per station and day type, uses weather-aware deviation detection with adjusted tolerance thresholds (stricter for good weather, more lenient for severe conditions), and generates daily operational alerts at 6 AM with plain-language explanations.
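A rolling baseline per station and day type, combined with a weather-dependent tolerance, might look like the following Python sketch. The 21-day window and the stricter-in-good-weather rule come from the description above; the tolerance values, weather categories, and function names are hypothetical.

```python
from datetime import date, timedelta
from statistics import fmean

# Hypothetical tolerance bands (allowed fractional deviation from baseline):
# tighter in good weather, looser when conditions are severe.
TOLERANCE = {"good": 0.25, "moderate": 0.40, "severe": 0.60}


def day_type(d: date) -> str:
    return "weekend" if d.weekday() >= 5 else "weekday"


def baseline(history: dict, target: date, window_days: int = 21) -> float:
    """Mean trips on same-day-type dates in the `window_days` before `target` (one station)."""
    start = target - timedelta(days=window_days)
    same_type = [
        trips
        for d, trips in history.items()
        if start <= d < target and day_type(d) == day_type(target)
    ]
    if not same_type:
        raise ValueError("no comparable days in the baseline window")
    return fmean(same_type)


def is_anomalous(observed: float, history: dict, target: date, weather: str) -> bool:
    """Compare today's trips to the rolling baseline using a weather-dependent tolerance."""
    expected = baseline(history, target)
    if expected == 0:
        return observed > 0
    return abs(observed - expected) / expected > TOLERANCE[weather]
```

Separating weekdays from weekends keeps commuter peaks from distorting the weekend baseline, and the weather-dependent tolerance means a quiet day during a storm is less likely to trigger an alert than the same drop on a sunny day.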

A React dashboard summarizes system health and ranks stations requiring attention, designed specifically for non-technical operations users. Otto created custom rules, automated analysis triggers, and intelligent alerting that transforms raw anomaly data into operational intelligence.

"Agentic platforms shift effort toward methodology and insight. Using Ascend significantly reduced the time spent on coding and debugging."

— Julia Schmid

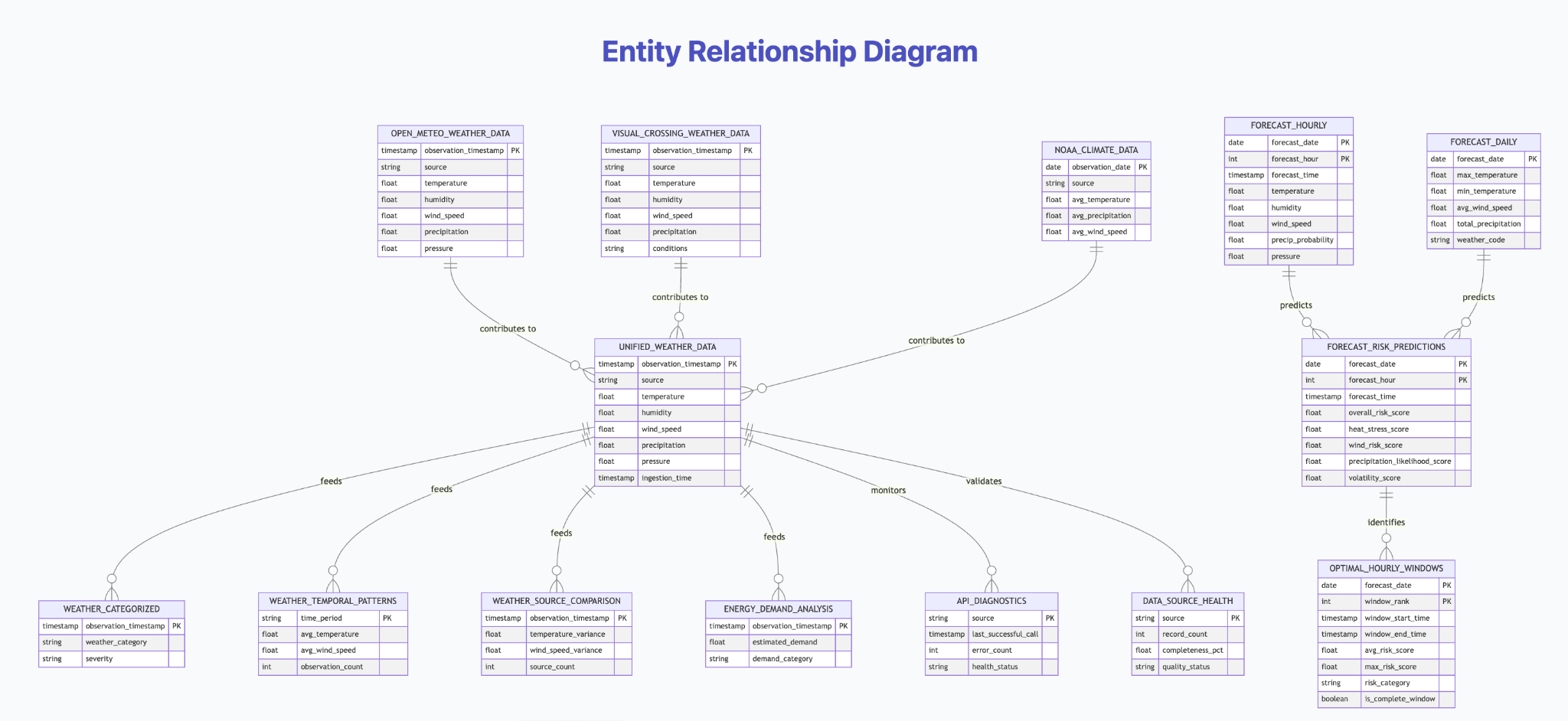

Saumya Singh Jaiswal — Weather Risk Analysis Pipeline

Saumya built a production-grade pipeline that combines three authoritative weather data sources (Open-Meteo, Visual Crossing, NOAA) to identify optimal 5-hour operation windows using a proprietary multi-factor risk scoring algorithm. The system runs daily at 2 AM UTC, scans a 7-day forecast for candidate windows, and highlights the single best one.
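The window search itself is a classic sliding-window minimum. In this Python sketch, per-hour risk scores are taken as input because the actual scoring algorithm is proprietary; the simple averaging across providers in `blended_risk` is a hypothetical placeholder, not Saumya's method.

```python
from statistics import fmean


def blended_risk(*sources):
    """Average per-hour risk across providers (hypothetical blending, for illustration only)."""
    return [fmean(hour) for hour in zip(*sources)]


def best_window(hourly_risk, hours=5):
    """Return (start_hour, mean_risk) for the lowest-risk run of `hours` consecutive hours."""
    if len(hourly_risk) < hours:
        raise ValueError("forecast is shorter than the requested window")
    window_sum = sum(hourly_risk[:hours])
    best_start, best_sum = 0, window_sum
    for start in range(1, len(hourly_risk) - hours + 1):
        # Slide one hour: add the hour entering the window, drop the one leaving it.
        window_sum += hourly_risk[start + hours - 1] - hourly_risk[start - 1]
        if window_sum < best_sum:
            best_start, best_sum = start, window_sum
    return best_start, best_sum / hours
```

Updating the running sum in constant time per hour keeps the search linear in the forecast length (168 hours for seven days), and the strict `<` comparison returns the earliest window when several tie.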

“This project proves that agentic data engineering is not just a concept—it's a practical, powerful approach to building sophisticated data pipelines through natural language interactions.”

- Saumya Singh Jaiswal

Top takeaways from these standout projects

Across industries—insurance regulation, real estate investment, private markets finance, oil and gas operations, urban mobility, media advocacy, telecom infrastructure, city management, and environmental monitoring—a few patterns emerge from this year's hackathon winners.

Domain expertise drives the value.

None of these projects started with a technology question. They started with a real business pain point: regulatory risk, investment prospecting, portfolio context, pipeline efficiency, operational monitoring, narrative measurement, infrastructure correlation, urban disruption, and environmental stress. Ascend and Otto handled the data engineering complexity; the builders focused on what the system should accomplish and why it matters.

Production-ready from the start.

These pipelines are deployed and scheduled: they run on cadences from intraday to monthly, write to production data stores, and deliver automated outputs via email or dashboards, with data quality tests built in. In a few hours, builders created entire data products.

Iteration is the process.

Every winner described a collaborative back-and-forth with Otto: refining prompts, adjusting parameters, validating outputs, and building incrementally. The most successful builders treated the agent as a collaborator in designing, building, and monitoring their pipelines.

Build What's Next

Our hackathon participants went from idea to deployed pipeline in one week. What could you build with Ascend?

→ Automate compliance monitoring like Bernadine

→ Build investment analytics like Prashant

→ Create market intelligence like Nashe

→ Optimize infrastructure like Farheen

→ Monitor operations like Julia

→ Measure advocacy impact like Suha

→ Correlate systems like Yogesh

→ Detect disruptions like Ameya

→ Track environmental health like Sabbir

Start your free trial at ascend.io and see what's possible when domain expertise meets agentic data engineering.
