Reverse ETL platform

Seamlessly Share and Activate Data

Place data products at the center of your business.

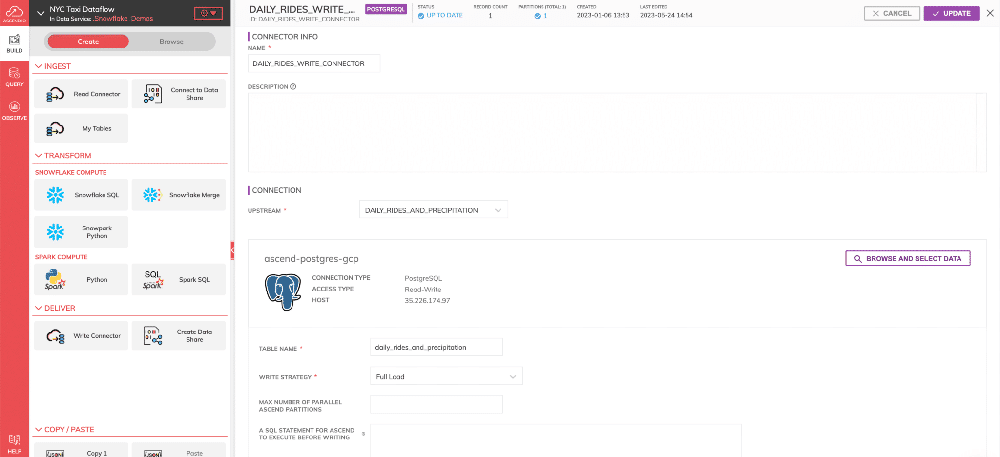

Activate With a Single Click

Put clean data back into operational systems.

Use output connectors to write data back into the databases that run your business

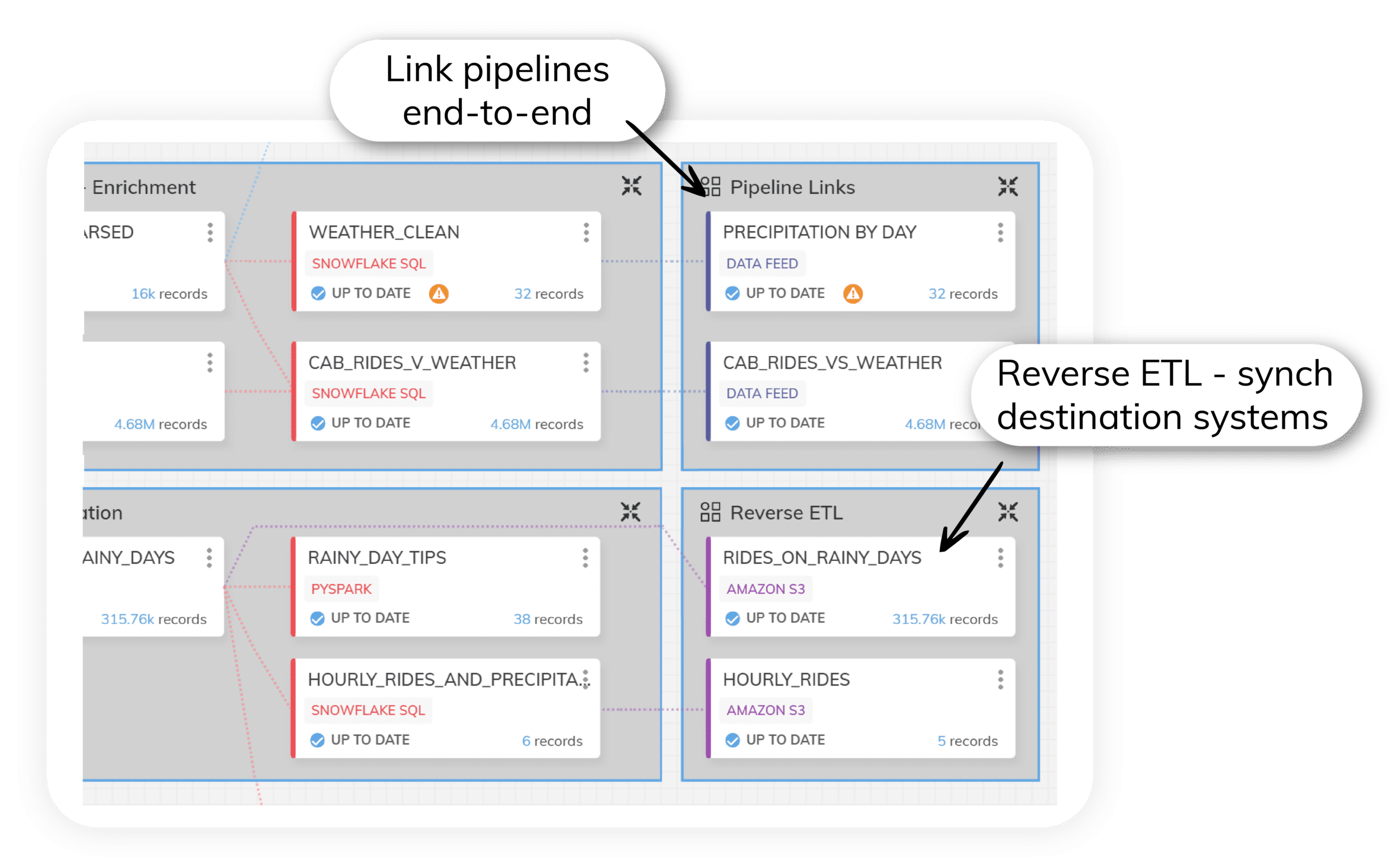

Build on previous success.

Subscribe to datasets generated in other pipelines and pick up where they end

Leverage powerful automation that propagates changes from those pipelines into yours

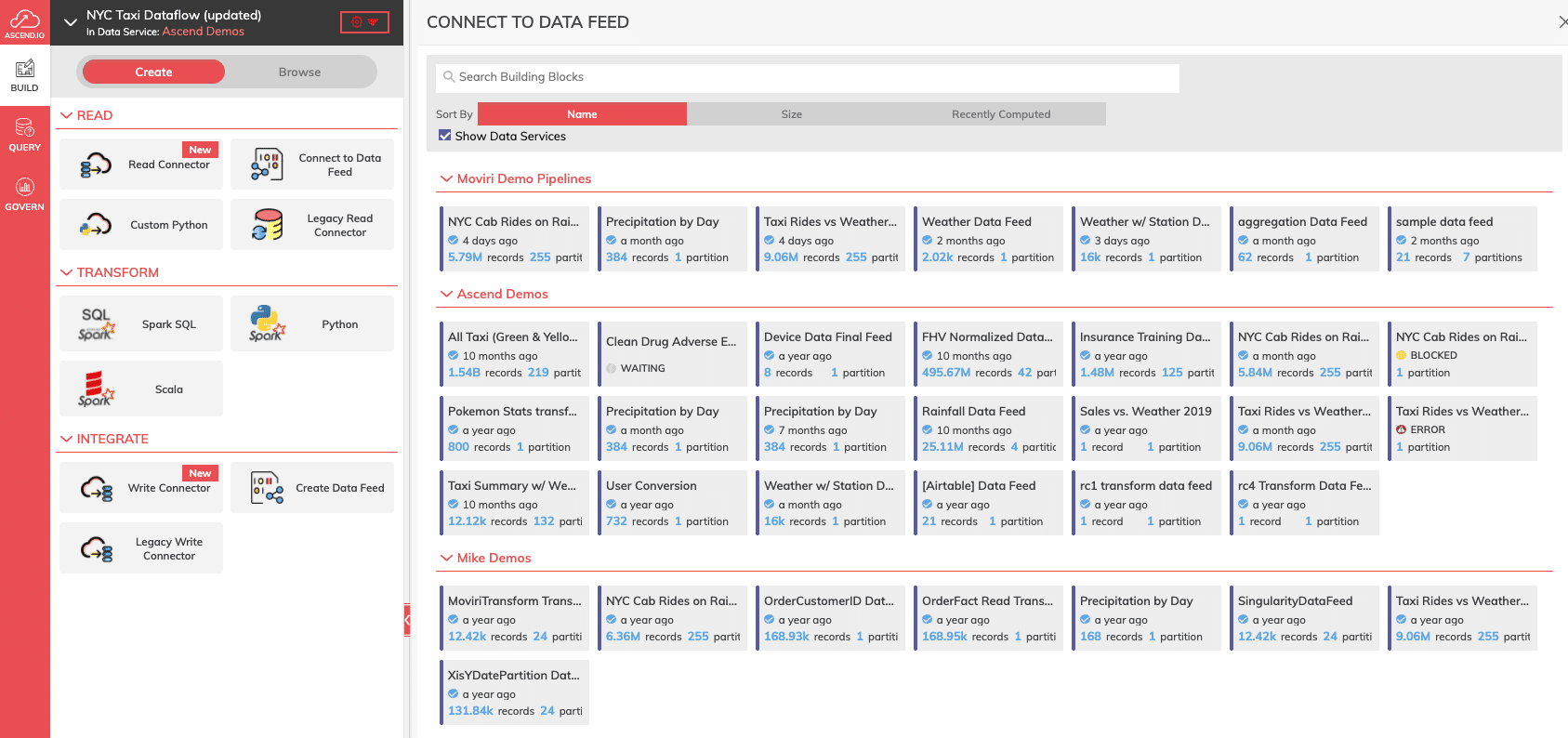

Don't reinvent the wheel.

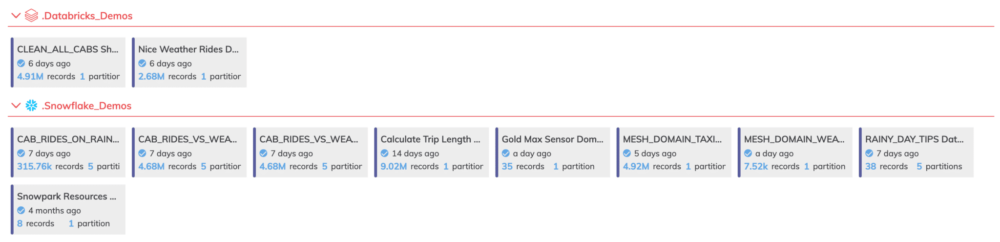

Eliminate dataset duplication with a built-in cataloging system

Find and subscribe to datasets easily

Make a mesh of it.

Connect datasets across domains and even clouds

Subscribe to a data product in Databricks and automatically pull it into Snowflake (and vice versa)

Get Started for Free

Play around with it first. Pay and add your team later.
