Model Context Protocol (MCP) servers are changing how data engineering teams automate pipeline operations. Instead of building custom integrations for every tool in your stack, MCP provides a standardized way for AI agents to interact with tools like Slack, GitHub, PagerDuty, and dozens of other platforms. The result? Intelligent agents that don't just monitor your pipelines—they take action on your behalf.

What are MCP servers?

Model Context Protocol servers represent a fundamental shift in AI-tool integration. Anthropic introduced MCP in November 2024, and it spread quickly. By early 2025 there were more than 1,000 community-built servers, and major platforms including OpenAI, Microsoft, and Google DeepMind had integrated it.

The core problem MCP solves: before MCP, every AI application needed a custom integration with every external system. With M AI applications and N external systems, that means up to M×N integrations. For example, 5 AI tools and 10 data sources could require 50 separate integration points. This M×N complexity was expensive to build and very hard to maintain at scale.

MCP's solution: a standardized protocol that turns the M×N problem into an M+N one. Each AI application implements an MCP client once, and each external system is exposed through an MCP server once. After that, any compatible agent can use any server. In the example above, that means 15 implementations instead of 50.
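The counting argument is easy to check. This sketch only tallies integrations under each model, using the 5-tool, 10-source example. It assumes every application needs to reach every system, and it says nothing about how much work each individual integration takes.

```python
def point_to_point(m: int, n: int) -> int:
    """Custom integrations: every AI application wires up every external system."""
    return m * n


def with_mcp(m: int, n: int) -> int:
    """MCP: each application ships one client, each system ships one server."""
    return m + n


if __name__ == "__main__":
    apps, systems = 5, 10  # the 5 AI tools and 10 data sources from the text
    print(point_to_point(apps, systems))  # 50
    print(with_mcp(apps, systems))        # 15
```

The gap widens as either side grows. With 20 applications and 50 systems, point-to-point needs 1,000 integrations, while MCP needs 70 implementations.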

MCP servers and the agentic execution loop

MCP servers give AI agents standardized access to external systems as tools, and this is what makes agentic workflows practical. Agents no longer have to rely on simple reactive scripts that fire when something goes wrong. Instead, they can carry out multi-step workflows that mirror how experienced engineers actually troubleshoot and resolve issues.

The MCP architecture: Tools, Resources, and Prompts

The official MCP specification defines three core primitives that enable intelligent automation: Tools, Resources, and Prompts. Understanding how these work together explains why MCP has seen such rapid industry adoption.

Tools are where we're seeing the most activity in the industry today. They let agents take actions in external systems, such as creating GitHub issues, sending Slack messages, triggering PagerDuty incidents, or modifying configurations. Tools produce the visible results that teams immediately recognize as valuable automation. Most MCP implementations start here because the benefits are obvious: agents create tickets automatically when pipelines fail, so engineers don't have to.

Resources are proving crucial for the intelligence behind those actions. Tools get the attention, but resources are the data behind those tools that agents can read for context. A GitHub MCP server, for example, can expose Tools (create issues and pull requests) alongside Resources (git history, repository files, documentation). A database MCP server can expose Tools (execute queries) alongside Resources (schema information, table data, logs). This contextual data is what turns simple automation into intelligent workflows. By reading the data sources connected to your existing tools, agents can understand why something happened, not just that it happened.

Prompts are the often-overlooked component that drives rich, contextual actions. In the MCP specification, prompts are reusable templates that servers expose. In practice, they are the instructions that guide agent behavior. The industry focuses on connecting tools, but the quality of automation can depend heavily on those instructions. Well-crafted prompts enable agents to analyze complex situations, correlate data across systems, and take nuanced actions based on context rather than simple rules.
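On the wire, MCP messages are JSON-RPC 2.0, and each primitive has its own methods. This sketch builds request objects for the spec's `tools/call`, `resources/read`, and `prompts/get` methods but does not send them. The tool name, resource URI, and prompt name are hypothetical. A real client would first complete MCP's initialization handshake and then discover the actual names through `tools/list`, `resources/list`, and `prompts/list`.

```python
import itertools
import json
from typing import Optional

# JSON-RPC 2.0 requests carry an id so each response can be matched to its request.
_next_id = itertools.count(1)


def request(method: str, params: Optional[dict] = None) -> dict:
    """Build (but do not send) a JSON-RPC 2.0 request object."""
    msg = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Tools: list what the server offers, then invoke one by name with arguments.
list_tools = request("tools/list")
call_tool = request("tools/call", {
    "name": "create_issue",  # hypothetical tool name
    "arguments": {"title": "Pipeline failure in daily_user_metrics"},
})

# Resources: read contextual data identified by a URI.
read_resource = request("resources/read", {
    "uri": "file:///repo/transforms/user_metrics.sql",  # hypothetical URI
})

# Prompts: fetch a server-defined prompt template, filled in with arguments.
get_prompt = request("prompts/get", {
    "name": "triage_pipeline_failure",  # hypothetical prompt name
    "arguments": {"pipeline": "daily_user_metrics"},
})

if __name__ == "__main__":
    for msg in (list_tools, call_tool, read_resource, get_prompt):
        print(json.dumps(msg))
```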

What we're seeing in practice is that many of the most successful MCP implementations combine all three: agents use Resources to gather comprehensive context, apply Prompts to analyze that context intelligently, then execute appropriate actions through Tools. The result is automation that feels less like scripted responses and more like having an experienced engineer monitoring your systems.
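The Resources → Prompts → Tools sequence can be sketched as a single handler. Everything here is a stand-in. `FakeServer` replaces a real MCP connection, the `logs://` URI scheme and `create_issue` tool are hypothetical, and `decide` stands in for the model call with a trivial keyword check. The sketch shows the order of operations, not an agent implementation.

```python
from dataclasses import dataclass, field


@dataclass
class FakeServer:
    """In-memory stand-in for an MCP server (hypothetical; performs no real I/O)."""
    resources: dict
    calls: list = field(default_factory=list)

    def read_resource(self, uri: str) -> str:
        return self.resources[uri]

    def call_tool(self, name: str, arguments: dict) -> None:
        # Record the call instead of acting on an external system.
        self.calls.append((name, arguments))


PROMPT = (
    "Pipeline {pipeline} failed.\n"
    "Recent log:\n{log}\n"
    "Decide whether to open an issue."
)


def decide(prompt: str) -> bool:
    """Placeholder for the model: open an issue if the prompt mentions an error."""
    return "Error" in prompt


def handle_failure(server: FakeServer, pipeline: str) -> None:
    # 1. Resources: gather context.
    log = server.read_resource(f"logs://{pipeline}/latest")
    # 2. Prompts: frame that context for the model.
    prompt = PROMPT.format(pipeline=pipeline, log=log)
    # 3. Tools: act on the decision.
    if decide(prompt):
        server.call_tool("create_issue", {"title": f"Pipeline failure in {pipeline}", "body": log})


if __name__ == "__main__":
    srv = FakeServer(resources={
        "logs://daily_user_metrics/latest":
            "ColumnNotFoundError: Column 'user_segment' not found in table users_raw",
    })
    handle_failure(srv, "daily_user_metrics")
    print(srv.calls)
```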

Extending Agentic Data Engineering via MCP servers

Ascend's Agentic Data Engineering platform demonstrates how MCP servers can extend and amplify intelligent automation, enabling teams to customize their agentic workflows.

At the core of Ascend's architecture, the system captures comprehensive metadata from every aspect of your data pipeline operations—schema changes, performance metrics, data quality test results, lineage information, execution history, resource utilization, user interactions, and more. This metadata forms the foundation for intelligent decision-making, but the real power comes from how it's processed and acted upon.

Ascend's built-in agents can already take powerful actions within the platform—editing files, triggering pipeline runs, and optimizing execution plans based on observed patterns. MCP servers extend this intelligence beyond platform boundaries. While Ascend's agents excel at internal data pipeline operations, MCP integration enables them to leverage the same rich context and analytical capabilities for actions across your entire data toolchain. The platform's understanding of pipeline state, data quality patterns, and operational context now drives actions in GitHub, Slack, PagerDuty, and dozens of other external systems.

This creates a powerful multiplier effect. Instead of building separate automation for external tools, Ascend's existing intelligence can now orchestrate sophisticated workflows across your complete infrastructure stack. The result is cohesive automation that understands both your data systems and your operational processes, enabling truly intelligent responses to complex scenarios.

Practical implementation: automated triggers and extensible actions

Ascend's event-driven automation engine demonstrates how this intelligence extension works in practice. The platform monitors its metadata stream in real time. Events in that stream, such as schema changes, failed runs, or data quality test failures, automatically trigger embedded agents with full context about what changed, why it matters, and what systems might be affected.

Unlike simple alerting systems that just notify when thresholds are crossed, Ascend's triggers provide agents with comprehensive situational awareness. When a pipeline fails, agents don't just know "failure occurred"—they understand the failure mode, recent changes that might be related, downstream dependencies that are impacted, and historical patterns for similar issues.
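Ascend's internals aren't described here, so this sketch does not show how the platform does it. Instead, it assembles two pieces of the context the paragraph describes. Downstream dependencies come from a breadth-first walk of a lineage graph, and related recent changes come from a time window before the failure. It assumes lineage is available as an adjacency map and changes as timestamped records. All names are hypothetical, and historical-pattern matching is left out.

```python
from collections import deque
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class Change:
    author: str
    path: str
    at: datetime


def downstream(lineage: Dict[str, List[str]], start: str) -> List[str]:
    """Breadth-first walk of lineage edges: every asset fed, directly or not, by `start`."""
    seen, queue, order = {start}, deque([start]), []
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


def failure_context(component: str, failed_at: datetime, error: str,
                    lineage: Dict[str, List[str]], changes: List[Change],
                    window: timedelta = timedelta(hours=24)) -> dict:
    """Bundle what an agent needs beyond 'failure occurred'."""
    recent = [c for c in changes if failed_at - window <= c.at <= failed_at]
    return {
        "component": component,
        "error": error,
        "recent_changes": recent,
        "impacted": downstream(lineage, component),
    }


if __name__ == "__main__":
    t = datetime(2025, 1, 1, 2, 30)
    lineage = {"users_raw": ["daily_user_metrics"],
               "daily_user_metrics": ["exec_dashboard", "churn_model"],
               "churn_model": ["exec_dashboard"]}
    changes = [Change("jane.doe", "transforms/user_metrics.sql", t - timedelta(hours=2))]
    print(failure_context("daily_user_metrics", t, "ColumnNotFoundError", lineage, changes))
```

The breadth-first walk tracks visited nodes, so assets reachable by several paths (like `exec_dashboard` above) are reported once.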

With MCP integration, this rich context now drives actions across your entire toolchain. Agents can gather additional context from external monitoring tools (Resources), apply sophisticated analysis based on both internal intelligence and external data (Prompts), then execute coordinated responses through multiple systems (Tools). A single pipeline issue might trigger internal remediation attempts, external ticket creation, team notifications, and stakeholder updates—all orchestrated by the same intelligence that understands your data operations.
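To make "a single pipeline issue might trigger" several responses concrete, here is a rule-based sketch of one incident fanning out into ordered steps across systems. In an agentic setup, a model would decide these steps from context rather than from fixed rules. The context keys (`recent_changes`, `impacted`), the critical-asset set, and every action name are hypothetical. The sketch only shows the shape of a coordinated response.

```python
from typing import List, Set, Tuple


def plan_response(ctx: dict, critical_assets: Set[str]) -> List[Tuple[str, str]]:
    """Turn one incident context into ordered (system, action) steps.

    `ctx` is assumed to hold "recent_changes" (a list) and "impacted"
    (a list of downstream asset names); both keys are hypothetical.
    """
    steps = [("platform", "retry_run")]  # try internal remediation first
    if ctx.get("recent_changes"):
        steps.append(("github", "create_issue"))  # a likely cause exists in code
    if ctx.get("impacted"):
        steps.append(("slack", "notify_team"))  # downstream owners will notice
    if critical_assets & set(ctx.get("impacted", [])):
        steps.append(("slack", "update_stakeholders"))
        steps.append(("pagerduty", "trigger_incident"))
    return steps


if __name__ == "__main__":
    ctx = {"recent_changes": ["jane.doe: transforms/user_metrics.sql"],
           "impacted": ["exec_dashboard"]}
    for system, action in plan_response(ctx, {"exec_dashboard"}):
        print(system, action)
```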


How to configure agentic MCP servers

Engineers can give agents access to MCP servers to act on their behalf. Within platforms like Ascend, users can configure these in a clean YAML file that provides embedded agents with the same tools their teams use.

A configuration that registers Slack, GitHub, and PagerDuty MCP servers lets your agents post Slack messages, create GitHub issues and pull requests, and trigger PagerDuty incidents, all through standardized MCP interfaces.
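Ascend's YAML schema isn't shown here, so this sketch does not reproduce it. Instead, it holds a server registry as a Python dict, loosely modeled on the `command`/`args` layout many MCP clients use to launch stdio servers, and validates the registry before handing it to an agent. The server package names are placeholders, not real packages. Listing only the *names* of required environment variables, rather than the secrets themselves, is a design choice of this sketch that keeps tokens out of the file.

```python
import os
from typing import List

# Hypothetical registry: placeholder package names, env lists hold variable NAMES only.
MCP_SERVERS = {
    "slack": {"command": "npx", "args": ["-y", "<slack-mcp-server>"],
              "env": ["SLACK_BOT_TOKEN"]},
    "github": {"command": "npx", "args": ["-y", "<github-mcp-server>"],
               "env": ["GITHUB_TOKEN"]},
    "pagerduty": {"command": "npx", "args": ["-y", "<pagerduty-mcp-server>"],
                  "env": ["PAGERDUTY_ROUTING_KEY"]},
}


def validate(servers: dict) -> List[str]:
    """Return a list of problems; an empty list means the registry looks usable."""
    problems = []
    for name, spec in servers.items():
        if not isinstance(spec.get("command"), str) or not spec["command"]:
            problems.append(f"{name}: missing command")
        if not all(isinstance(a, str) for a in spec.get("args", [])):
            problems.append(f"{name}: args must be strings")
        for var in spec.get("env", []):
            if var not in os.environ:
                problems.append(f"{name}: environment variable {var} is not set")
    return problems


if __name__ == "__main__":
    for problem in validate(MCP_SERVERS):
        print(problem)
```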

Once agents have tool access, the next step is to provide them with prompts to effectively take action based on the resources they can access. Here are a few example prompts data teams can leverage with their MCP servers.
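One low-tech way to keep prompts consistent is to treat them as templates filled from run metadata. This sketch uses the standard library's `string.Template`, and the template text and placeholder names are hypothetical. It shows two habits worth copying: stating which tools the agent should use and what it must not do, and failing loudly when a piece of context is missing.

```python
from string import Template

# Hypothetical prompt; $-placeholders are filled from pipeline run metadata.
FAILURE_PROMPT = Template(
    "You are on call for the $pipeline pipeline.\n"
    "It failed at $failed_at during the $step step.\n"
    "Error: $error\n"
    "Use the GitHub tools to open one issue that names the likely cause,\n"
    "links related recent commits, and suggests a fix. Do not push or merge code."
)


def render(ctx: dict) -> str:
    # substitute() raises KeyError on a missing placeholder, so gaps surface early
    # instead of reaching the agent as literal "$error" text.
    return FAILURE_PROMPT.substitute(ctx)


if __name__ == "__main__":
    print(render({"pipeline": "daily_user_metrics", "failed_at": "2:30 AM",
                  "step": "transform", "error": "ColumnNotFoundError"}))
```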

Use case 1: Intelligent pipeline failure response

When a pipeline fails, an agent with GitHub MCP access can go beyond a generic alert. It can analyze the failure and automatically create a detailed issue.

The agent can examine error logs, correlate with recent code changes, and create issues like:

"Pipeline failure in daily_user_metrics after schema change"

Pipeline failed at 2:30 AM during the transform step. Error suggests column 'user_segment' is missing from the source table.

Recent commit by @jane.doe (2 hours ago) modified the user segmentation logic in transforms/user_metrics.sql

Suggested fix: Verify the source table schema matches the expected columns in the transform.

Error log:

ColumnNotFoundError: Column 'user_segment' not found in table users_raw
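The deterministic parts of an issue like the one above can be assembled before the model adds its diagnosis and suggested fix. This sketch treats commits as suspects if they touched the failing step's files within a time window, formats the issue body, and wraps it in an MCP `tools/call` request. The `create_issue` tool name and its `owner`/`repo`/`title`/`body` arguments are assumptions, so check them against your GitHub MCP server's `tools/list` output. `Commit` and the other helpers are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Set


@dataclass
class Commit:
    author: str
    path: str
    at: datetime


def suspect_commits(commits: List[Commit], step_files: Set[str], failed_at: datetime,
                    window: timedelta = timedelta(hours=24)) -> List[Commit]:
    """Commits that touched the failing step's files shortly before the failure."""
    return [c for c in commits
            if c.path in step_files and failed_at - window <= c.at <= failed_at]


def build_issue(pipeline: str, step: str, failed_at: datetime, error: str,
                commits: List[Commit], step_files: Set[str]) -> dict:
    lines = [f"Pipeline failed at {failed_at:%H:%M} during the {step} step.", ""]
    for c in suspect_commits(commits, step_files, failed_at):
        hours = int((failed_at - c.at).total_seconds() // 3600)
        lines.append(f"Recent commit by @{c.author} ({hours} hours ago) modified {c.path}")
    lines += ["", "Error log:", "", error]
    return {"title": f"Pipeline failure in {pipeline}", "body": "\n".join(lines)}


def create_issue_call(owner: str, repo: str, issue: dict) -> dict:
    """Wrap the issue in an MCP tools/call request; tool and argument names are assumed."""
    return {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "create_issue",
                   "arguments": {"owner": owner, "repo": repo, **issue}},
    }
```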

Use case 2: Daily team notifications via Slack

Configure an agent to run after each pipeline completion and send intelligent summaries to your team:

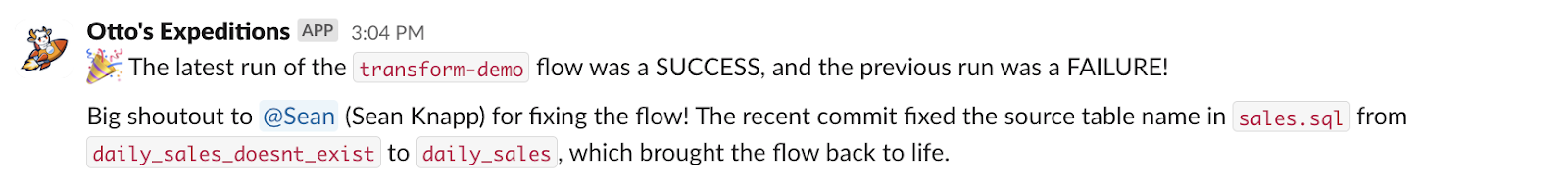

The result is a short summary message in the team's Slack channel after each run.
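This sketch shows how the facts behind such a summary could be assembled before the agent posts them. In practice, the agent would read run results from pipeline metadata and write the prose itself. `RunResult` and its fields are hypothetical. The payload uses the `channel` and `text` fields of Slack's `chat.postMessage` API, and a Slack MCP server's posting tool may name its arguments differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunResult:
    pipeline: str
    status: str   # hypothetical: "success" or "failed"
    rows: int
    minutes: float


def summarize(runs: List[RunResult]) -> str:
    failed = [r for r in runs if r.status != "success"]
    lines = [f"{len(runs) - len(failed)}/{len(runs)} pipelines succeeded"]
    for r in runs:
        mark = "OK" if r.status == "success" else "FAILED"
        lines.append(f"- {r.pipeline}: {mark}, {r.rows:,} rows in {r.minutes:.1f} min")
    return "\n".join(lines)


def slack_payload(channel: str, runs: List[RunResult]) -> dict:
    # Field names follow Slack's chat.postMessage (channel, text).
    return {"channel": channel, "text": summarize(runs)}


if __name__ == "__main__":
    runs = [RunResult("daily_user_metrics", "success", 1200, 4.5),
            RunResult("orders", "failed", 0, 0.5)]
    print(slack_payload("#data-pipelines-test", runs)["text"])
```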

Use case 3: Managing SLAs & Alerting

When critical data SLAs are at risk, agents can automatically escalate through PagerDuty with full business context.
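This escalation needs two pieces: a rule for when an SLA counts as at risk, and the escalation event itself. The sketch covers both. It flags risk when the projected finish is later than a buffer before the deadline. It then builds a body in the shape of PagerDuty's Events API v2 (`routing_key`, `event_action`, `dedup_key`, and a `payload` with `summary`, `source`, `severity`, `custom_details`) but does not send it. The buffer, the dedup-key format, and the severity rule are assumptions. A PagerDuty MCP server may wrap this API behind its own tool.

```python
from datetime import datetime, timedelta
from typing import List


def sla_at_risk(now: datetime, remaining: timedelta, deadline: datetime,
                buffer: timedelta = timedelta(minutes=30)) -> bool:
    """True if the projected finish is later than `buffer` before the deadline."""
    return now + remaining > deadline - buffer


def pagerduty_event(routing_key: str, pipeline: str, now: datetime,
                    remaining: timedelta, deadline: datetime,
                    consumers: List[str]) -> dict:
    projected = now + remaining
    return {
        "routing_key": routing_key,
        "event_action": "trigger",
        # One incident per pipeline per day, so repeated checks don't page twice.
        "dedup_key": f"sla-{pipeline}-{deadline:%Y-%m-%d}",
        "payload": {
            "summary": f"SLA at risk: {pipeline} projected {projected:%H:%M}, due {deadline:%H:%M}",
            "source": pipeline,
            "severity": "critical" if projected > deadline else "warning",
            "custom_details": {"downstream_consumers": consumers},
        },
    }
```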

The benefits: measurable automation

Teams using agentic MCP servers report significant operational improvements:

Faster incident response: Issues are created and assigned within minutes of pipeline failures, not hours.

Reduced alert fatigue: Instead of generic failure notifications, teams get intelligent summaries with context and suggested actions.

Better team communication: Automated daily summaries and change notifications keep everyone informed without manual status updates.

Proactive issue resolution: Many pipeline issues are identified and documented before they impact downstream systems or users.

Try it yourself

Ready to add intelligent automation to your data pipelines? Start with this basic Slack notification setup:

- Configure your first MCP server (Slack) in your platform's YAML configuration

- Create a simple agent prompt that posts pipeline status to a test channel

- Trigger the agent after your next pipeline run

- Expand gradually by adding GitHub for issue creation, then PagerDuty for escalation

The key is starting simple and building confidence in your agent's decision-making before giving it access to more powerful actions.
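Prompts can ask an agent to be cautious, but you can also enforce a gradual rollout in code by gating which tools the agent may call at each stage. This minimal sketch follows the Slack → GitHub → PagerDuty order from the steps above. The stage names and `system.action` tool identifiers are hypothetical.

```python
from typing import Set

# Hypothetical rollout stages; each stage unlocks tools on top of the previous ones.
STAGES = [
    ("notify", {"slack.post_message"}),
    ("track", {"github.create_issue", "github.create_pull_request"}),
    ("escalate", {"pagerduty.trigger_incident"}),
]


def allowed_tools(stage: str) -> Set[str]:
    """All tools unlocked up to and including `stage`."""
    tools: Set[str] = set()
    for name, added in STAGES:
        tools |= added
        if name == stage:
            return tools
    raise ValueError(f"unknown stage: {stage}")


def guard(stage: str, tool: str) -> None:
    """Refuse any tool call the current stage has not unlocked."""
    if tool not in allowed_tools(stage):
        raise PermissionError(f"{tool} is not enabled at stage '{stage}'")
```

Calling `guard` before every tool invocation means that widening the agent's reach is an explicit configuration change, not a side effect of a prompt edit.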

Your data pipelines don't have to be manually managed. With MCP servers, your agents can handle the routine work while you focus on building better data products. The future of data engineering is agentic—and it's available today.
