I spend a lot of time talking with data engineering teams, and the same pain points come up in every conversation: pipelines fail at the worst possible moments, incident response eats up way too much time, and every team has unique operational needs that no generic tool can anticipate.

Most teams end up building custom scripts, setting up basic alerting, and hoping for the best. But there's always been this gap between what generic automation can do and what data teams actually need—automation that understands their specific pipelines, business context, and operational procedures.

Today, I'm excited to share how we're closing that gap with Custom Agents, a new capability that lets data teams build intelligent, context-aware automation in minutes rather than months.

The Problem: Automation that doesn't understand context

Data pipeline automation today feels like it's stuck in the past. When something breaks, you might get an alert, but then you're back to manual work: digging through logs, understanding downstream impact, coordinating fixes across teams, updating stakeholders.

The fundamental issue is that traditional automation operates in isolation from your data platform. It can't answer questions like "What changed in the recent deployment that might have caused this?" or "Which business units will be affected if this pipeline stays down?"

Even when teams invest weeks building custom automation, these tools lack the context to make intelligent decisions. They're essentially fancy if-then statements that execute the same response regardless of the situation.

A Different Approach: AI that sees what you see

At Ascend, we've been working on something we call Agentic Data Engineering—the idea that AI agents should be full participants in data operations, not just code assistants bolted onto existing tools.

Our AI assistant Otto already helps teams with routine tasks like generating documentation, suggesting code improvements, and monitoring pipeline health. But we kept hearing from teams: "This is great, but we have unique operational needs that go beyond what any built-in system can handle."

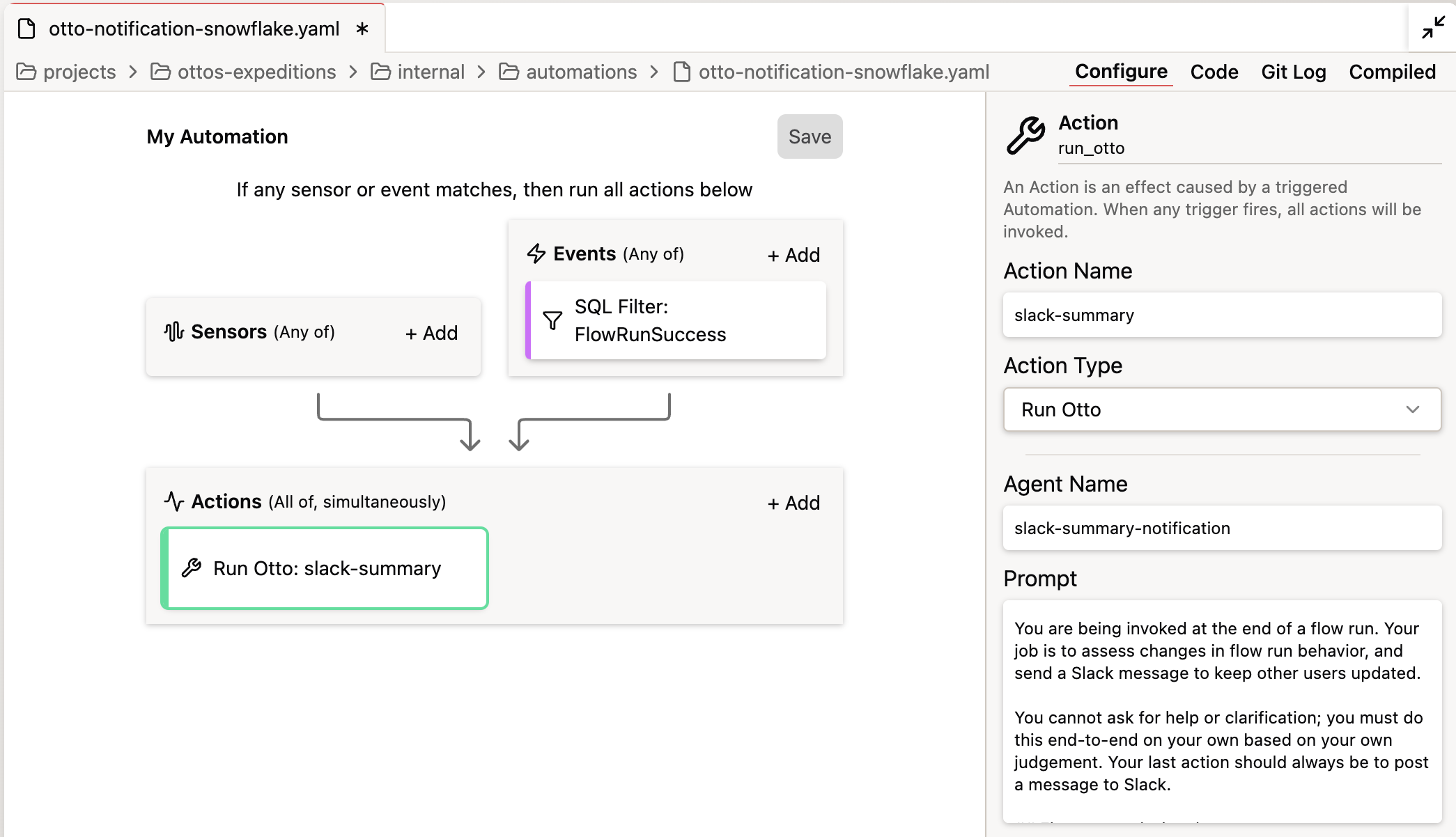

That's where Custom Agents come in. They're specialized versions of Otto that you can build for specific use cases, configured with your organization's context and connected to your existing tools.


What makes Custom Agents different

The key insight is that effective automation requires the same context that human engineers rely on. When an experienced data engineer investigates a pipeline failure, they don't just look at error logs—they consider recent code changes, understand downstream dependencies, know which teams to notify, and can recognize patterns from similar past incidents.

Custom Agents work the same way. They have access to comprehensive metadata about your data pipelines: execution history, dependency relationships, recent changes, performance patterns, and business context. This unified view enables them to make informed decisions rather than executing rigid scripts.

Take a pipeline failure as an example. When a production pipeline fails, a Custom Agent can automatically:

- Analyze the failure using execution logs and metadata

- Compare against similar historical incidents to identify likely causes

- Assess downstream impact based on data lineage

- Create detailed incident reports with suggested remediation steps

- Notify the right teams through your existing communication channels

- Track the incident through to resolution

All of this happens automatically, with the same level of analysis you'd expect from an experienced engineer who knows your systems inside and out.
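To make the "assess downstream impact" step concrete, here's a minimal sketch of how lineage can be walked to find everything affected by a failure. This is not Ascend's API: the `LINEAGE` mapping, the pipeline names, and the `downstream_impact` function are all hypothetical, and a real agent would read lineage from the platform's metadata rather than a hard-coded dictionary.

```python
from collections import deque

# Hypothetical lineage graph: each pipeline maps to the pipelines
# that consume its output. Names are illustrative only.
LINEAGE = {
    "raw_orders": ["clean_orders"],
    "clean_orders": ["daily_revenue", "customer_ltv"],
    "daily_revenue": ["finance_dashboard"],
    "customer_ltv": [],
    "finance_dashboard": [],
}


def downstream_impact(failed, lineage):
    """Return every pipeline downstream of `failed`, in breadth-first order."""
    seen = {failed}  # never report the failed pipeline itself, even in a cycle
    affected = []
    queue = deque(lineage.get(failed, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.add(node)
        affected.append(node)
        queue.extend(lineage.get(node, []))
    return affected


if __name__ == "__main__":
    print(downstream_impact("clean_orders", LINEAGE))
```

Breadth-first order lists the closest consumers first, which is usually the order in which teams need to hear about the failure.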

Building intelligence that understands your business

What sets Custom Agents apart from traditional automation is how easy they are to build and customize. Instead of weeks of development, you define agent behavior using simple markdown files with natural language instructions.

Here's what a "Production Incident Agent" looks like:

This agent understands your team's specific escalation procedures, knows which stakeholders to notify for different types of failures, and can automatically create tickets in your project management system with the right priority and context.
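The escalation logic described above can be pictured as a small routing table. The sketch below is only an illustration of the idea in Python, not the markdown agent definition itself: the failure types, channel names, priorities, and the `route_incident` function are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    notify: tuple          # channels to message (hypothetical names)
    ticket_priority: str   # priority for the ticket the agent would open


# Hypothetical escalation policy keyed by failure type.
ESCALATION = {
    "schema_change": Route(("#data-platform",), "P2"),
    "upstream_outage": Route(("#data-platform", "#vendor-ops"), "P1"),
}
DEFAULT_ROUTE = Route(("#data-oncall",), "P3")


def route_incident(failure_type, is_production):
    """Pick who to notify and what priority to assign for a failure."""
    route = ESCALATION.get(failure_type, DEFAULT_ROUTE)
    # Assumed rule: production failures always reach the on-call channel.
    if is_production and "#data-oncall" not in route.notify:
        return Route(route.notify + ("#data-oncall",), route.ticket_priority)
    return route


if __name__ == "__main__":
    print(route_incident("upstream_outage", is_production=True))
```

In practice the point of a Custom Agent is that rules like these are written as natural language instructions rather than code; the table just shows the kind of decision being encoded.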

Or you might build a "Code Review Assistant" that enforces your team's standards:

The agents connect to your existing tools—Slack, GitHub, PagerDuty, Jira—through standard integrations, so they enhance your current workflows rather than requiring you to change tools.

Beyond Incident Response: Proactive operations

While incident response gets a lot of attention, Custom Agents excel at proactive operations too. Teams are building agents that:

- Enforce Governance: Automatically review code changes for compliance with security policies and coding standards

- Optimize Performance: Monitor resource usage patterns and suggest optimizations based on cost and performance targets

- Streamline Communication: Generate executive summaries of pipeline health and automatically distribute reports to stakeholders

The common thread is that these agents understand your specific business context, operational procedures, and team dynamics.
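As a rough picture of what a governance check involves, the sketch below scans the added lines of a unified diff against two example rules. Everything here is an assumption for illustration: the rules, the `review_diff` function, and the regular expressions are hypothetical, and an actual agent would apply your team's own written policies with far more context than a pattern match.

```python
import re

# Hypothetical policy rules: (compiled pattern, message shown to the author).
RULES = [
    (
        re.compile(r"(password|secret|api_key)\s*=\s*['\"][^'\"]+['\"]", re.IGNORECASE),
        "possible hardcoded credential",
    ),
    (
        re.compile(r"select\s+\*", re.IGNORECASE),
        "SELECT * in pipeline SQL",
    ),
]


def review_diff(diff_text):
    """Return (diff line number, message) for each rule hit on an added line."""
    findings = []
    for number, line in enumerate(diff_text.splitlines(), start=1):
        # Only added lines are reviewed; skip the "+++ b/file" header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for pattern, message in RULES:
            if pattern.search(line):
                findings.append((number, message))
    return findings


if __name__ == "__main__":
    sample = "\n".join([
        "+++ b/pipeline.py",
        "+api_key = 'abc123'",
        "-password = 'old'",
        "+query = 'SELECT * FROM orders'",
    ])
    for finding in review_diff(sample):
        print(finding)
```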

Making advanced AI accessible

One of our core beliefs is that powerful AI capabilities shouldn't be limited to teams with extensive machine learning expertise. Custom Agents democratize sophisticated automation by providing a simple interface backed by advanced AI.

As our CEO Sean Knapp puts it: "Building powerful and safe AI agents shouldn't take weeks. Teams should be able to build, test, and deploy sophisticated automation safely in just a couple of minutes."

This accessibility is crucial because every data team has unique needs. The specific failure patterns you see, the stakeholders you need to coordinate with, the business logic that drives your decisions—these details matter, and they're different for every organization.

The broader shift toward Agentic Data Engineering

Custom Agents represent part of a broader shift we're seeing in data operations. Instead of expecting humans to manually manage every aspect of pipeline operations, we're moving toward systems where intelligent agents handle routine tasks and escalate when human expertise is truly needed.

This isn't about replacing data engineers—it's about amplifying their capabilities so they can focus on strategic work rather than operational toil. The best data engineers spend their time solving complex business problems, not babysitting pipelines or manually coordinating incident response.

What's next

We're excited to see what teams build with Custom Agents. Every data engineering team has unique operational challenges, and we expect to learn a lot about use cases we haven't even considered yet.

The early feedback has been encouraging. Teams report faster incident response times and, most importantly, engineers are spending more time on the creative, strategic work that makes a real business impact.
