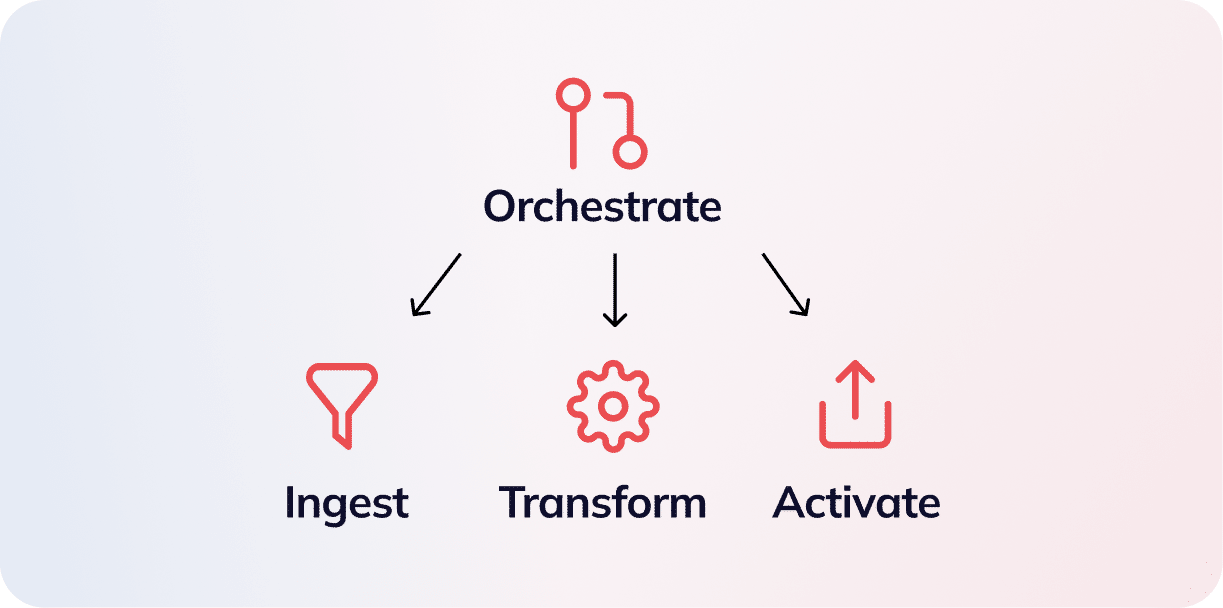

Build your workflows with the right tools for the job.

Deploy automation and AI to build your data workflows on a unified data engineering platform.

Data Ingestion

- Access a library of ready-to-use connectors.

- Set up standard batch loading intervals or code your own.

- Ingest data incrementally.

- Eliminate the need for full reloads from the source.

- Leverage a full-featured Python framework to write and deploy your own API connectors on the fly.

- Apply data quality rules during loading.
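
To make the incremental ingestion idea above concrete, here is a minimal sketch in plain Python. It is not the platform's connector framework; the `IncrementalLoader` class, the `updated_at` column, and the sample rows are all hypothetical names chosen for illustration. It assumes the source exposes a monotonically increasing change marker, which is used as a high-water mark so a second run skips rows that were already loaded.

```python
from dataclasses import dataclass, field


@dataclass
class IncrementalLoader:
    """Hypothetical helper: remembers a high-water mark between runs."""

    cursor: int = 0  # largest `updated_at` value loaded so far
    loaded: list = field(default_factory=list)

    def ingest(self, source_rows):
        # Keep only rows that changed after the stored cursor.
        new_rows = [r for r in source_rows if r["updated_at"] > self.cursor]
        if new_rows:
            self.loaded.extend(new_rows)
            # Advance the cursor so the next run skips these rows.
            self.cursor = max(r["updated_at"] for r in new_rows)
        return new_rows


# Illustrative source data (assumed shape, not a real connector payload).
source = [
    {"id": 1, "updated_at": 10},
    {"id": 2, "updated_at": 20},
]

loader = IncrementalLoader()
first_run = loader.ingest(source)   # loads both rows

source.append({"id": 3, "updated_at": 30})
second_run = loader.ingest(source)  # loads only the new row
```

The design choice here is to store only the cursor rather than the set of loaded keys, which keeps state small but relies on the source never back-dating changes.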

Data Transformation

- Develop via the command line, see results in the visual DAG.

- Code just your transformation logic, leave the rest to automation.

- Mix and match SQL and Python in the same pipeline.

- Use native Snowflake, Databricks, and BigQuery functions.

- See your entire pipeline in one view.

- Make updates without hopping between tools.

Data Orchestration

- Go beyond orchestration with DataAware Automation.

- Enjoy seamless propagation of changes across entire networks of pipelines.

- Detect and debug pipeline issues in real time as changes are propagated.

- Cut the amount of wasteful data processing by up to 63%.

- Never reprocess data that is already up to date.
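
One common way to avoid reprocessing data that is already up to date is to fingerprint each step's code together with its inputs and skip the step when the fingerprint has not changed. The sketch below illustrates that general technique only; it is not a description of how DataAware Automation is implemented, and the `Step` class and `fingerprint` function are hypothetical names.

```python
import hashlib
import json


def fingerprint(code: str, input_rows: list) -> str:
    """Hash the transformation code together with its input data."""
    payload = json.dumps({"code": code, "inputs": input_rows}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class Step:
    """Hypothetical pipeline step that reruns only when code or inputs change."""

    def __init__(self, code, fn):
        self.code = code              # source text of the transformation
        self.fn = fn                  # callable that performs it
        self.last_fingerprint = None
        self.output = None
        self.runs = 0                 # number of times real work was done

    def run(self, input_rows):
        current = fingerprint(self.code, input_rows)
        if current == self.last_fingerprint:
            # Nothing changed: reuse the stored output.
            return self.output
        self.output = self.fn(input_rows)
        self.last_fingerprint = current
        self.runs += 1
        return self.output


double = Step("x * 2", lambda rows: [x * 2 for x in rows])
double.run([1, 2, 3])      # computes
double.run([1, 2, 3])      # skipped, inputs and code unchanged
double.run([1, 2, 3, 4])   # computes again, inputs changed
```

In this sketch the whole input is hashed on every call; a production system would more likely track fingerprints per partition so that only the changed partitions are recomputed.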

Data Activation

- Put clean data back into operational systems with reverse ETL.

- Subscribe to datasets generated in other pipelines and pick up where they end.

- Eliminate dataset duplication with a built-in cataloging system.

- Connect datasets across domains and even clouds.

Data Quality

- Access a library of common data quality tests.

- Build and reuse custom data quality checks.

- Apply data quality rules during loading.

- Differentiate between data quality errors and everything else to raise smart alerts.
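
As an illustration of reusable checks applied during loading, the sketch below defines two small rule factories and a dedicated exception type so that data quality failures can be told apart from other errors. All names (`DataQualityError`, `not_null`, `in_range`, `load`) are hypothetical and are not the platform's API.

```python
class DataQualityError(Exception):
    """A row violated a rule, as opposed to a runtime or infrastructure failure."""


def not_null(column):
    """Build a reusable rule: the column must be present and not None."""
    def check(row):
        if row.get(column) is None:
            raise DataQualityError(f"{column} is null")
    return check


def in_range(column, low, high):
    """Build a reusable rule: the column value must lie in [low, high]."""
    def check(row):
        value = row[column]
        if not (low <= value <= high):
            raise DataQualityError(f"{column}={value} outside [{low}, {high}]")
    return check


def load(rows, rules):
    """Apply every rule to each row while loading; quarantine rows that fail."""
    accepted, rejected = [], []
    for row in rows:
        try:
            for rule in rules:
                rule(row)
            accepted.append(row)
        except DataQualityError as err:
            # Only quality failures are caught; any other exception propagates.
            rejected.append((row, str(err)))
    return accepted, rejected


rows = [
    {"id": 1, "amount": 50},
    {"id": None, "amount": 20},
    {"id": 3, "amount": -5},
]
rules = [not_null("id"), in_range("amount", 0, 100)]
accepted, rejected = load(rows, rules)
```

Because only `DataQualityError` is caught, an unexpected failure such as a missing `amount` column surfaces as an ordinary exception, which is one simple way to route the two kinds of problem to different alerts.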

Data Observability

- Get end-to-end observability on your pipelines with unprecedented levels of metadata.

- Trace all the data in your system to its source.

- Drill down to the code level on high-consumption areas.

- Receive real-time insights into the health and performance of your pipelines.