Ascend.io + Qubole

With 95% less code and significantly faster build times, learn why Qubole customers love Ascend.io.

The Power of Partnership

A simple, secure, and open data lake platform for accelerating machine learning, streaming, and ad hoc analytics.

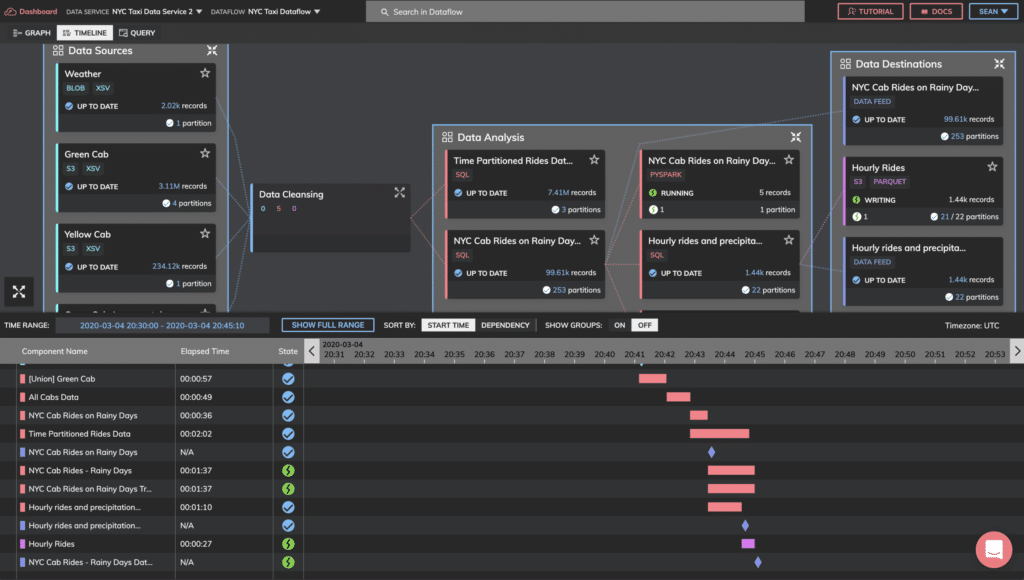

A DataAware engineering platform for autonomous, self-optimizing data pipelines that unify your lakes, warehouses, streams, and more.

The partnership between Ascend.io and Qubole will serve as a game changer for customers as they look to unlock their next level of data maturity. Customers will receive an end-to-end solution to simplify data engineering and reduce the time it takes to extract valuable business insights from real-time, large-scale analytics. The joint solution’s power and simplicity is impressive.

Not only does it put time back into the hands of data teams who are under constant pressure to do more with data, but it presents a combination of extensive customization and configurability with easy-to-use, visual, low-code interfaces. It truly offers the best of both worlds regardless of an organization’s data maturity.

Mike Leone, Senior Analyst at Enterprise Strategy Group

The Benefits of Qubole + Ascend.io

Self-Service Data Pipelines

Ascend for Qubole raises the productivity of data engineers, data scientists, and data analysts by replacing the complexity of data engineering with self-service pipelines built from low-code, declarative configurations.

Reduced Costs

Ascend continually optimizes Spark usage by processing only incremental changes to data and code. In concert, Qubole’s Heterogeneous Cluster Lifecycle and Intelligent Spot Management provide the most cost-effective combination of on-demand and spot virtual machines (VMs) to further minimize the cost of data processing.

Increased Performance

Qubole’s optimized open source frameworks, such as Presto, Spark, and Hive, allow users to process and query data with industry-leading response times, all without changing their normal workflow or manually tuning their Ascend-based pipelines.

Native Connectors

Qubole’s native connectors allow users to query unstructured or semi-structured data on any data lake, regardless of the storage file format: CSV, JSON, Avro, or Parquet. Meanwhile, Ascend’s extensive connector framework can ingest data from industry-leading databases, warehouses, APIs, and more.

Built-In Governance

Qubole’s advanced financial governance capabilities provide immediate visibility into platform usage, along with budget allocation, chargeback, monitoring, and control of cloud spend. Meanwhile, Ascend’s DataAware intelligence tracks data lineage across all pipelines, with a full history linking data to code, and attributes costs to individual pipeline operations so data teams know exactly where to tune their code.