The Autonomous Data Pipeline Engine

Powered by Ascend’s DataAware™ intelligence, the Autonomous Pipeline Engine converts your data goals into self-optimizing pipelines.

The First Data Engineering Platform That Understands Your Data

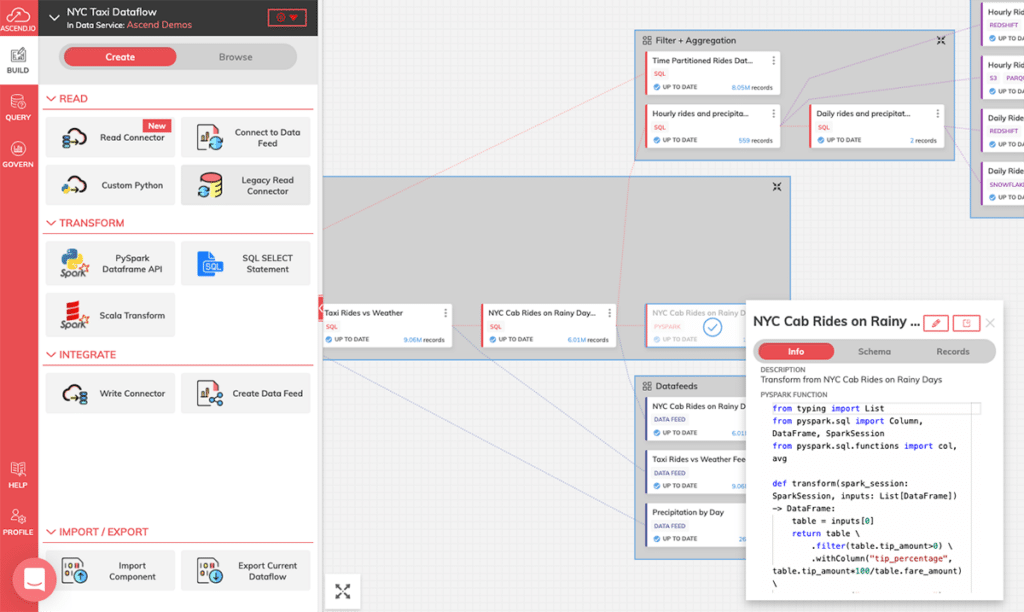

Under the hood of the Autonomous Pipeline Engine

Data Aware: know about all of your data, for all time, including its profile, where it came from, how it got there, where it went, how it was used, and why

Autonomous: continuously watch for changes to data and code, dynamically generating tasks and parameters to ensure the right data arrives, always

Scalable: able to track trillions of records, billions of data partitions, and react to changes within seconds

Self-Optimizing: use the data collected to drive greater efficiencies than can reasonably be achieved manually, such as avoiding duplicate and unnecessary computations
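One common way to get the "avoid duplicate and unnecessary computations" behavior described above is content fingerprinting. Each partition's output is keyed by a hash of the transform's code version plus the partition's input data. A partition is recomputed only when its data or the code changes, and identical inputs are computed once. The sketch below is an illustrative assumption, not Ascend's implementation. `fingerprint`, `IncrementalRunner`, and the partition names are hypothetical.

```python
import hashlib
import json
from typing import Callable

Records = list[dict]


def fingerprint(code_version: str, records: Records) -> str:
    """Hash a transform's code version together with the content of one input partition."""
    payload = json.dumps({"code": code_version, "data": records}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class IncrementalRunner:
    """Hypothetical runner that skips work whose code and input data have not changed."""

    def __init__(self) -> None:
        self.cache: dict[str, Records] = {}  # fingerprint -> stored output
        self.computations = 0  # how many times a transform actually ran

    def run(self, code_version: str, transform: Callable[[Records], Records],
            partitions: dict[str, Records]) -> dict[str, Records]:
        outputs: dict[str, Records] = {}
        for name, records in partitions.items():
            key = fingerprint(code_version, records)
            if key not in self.cache:  # new data or new code: compute once
                self.cache[key] = transform(records)
                self.computations += 1
            outputs[name] = self.cache[key]  # otherwise reuse the stored result
        return outputs


def double(rows: Records) -> Records:
    return [{"v": row["v"] * 2} for row in rows]


runner = IncrementalRunner()
parts = {"2024-01-01": [{"v": 1}], "2024-01-02": [{"v": 2}]}
runner.run("v1", double, parts)  # computes both partitions
parts["2024-01-03"] = [{"v": 3}]
runner.run("v1", double, parts)  # computes only the new partition
print(runner.computations)  # 3
runner.run("v2", double, parts)  # code changed: all three are recomputed
print(runner.computations)  # 6
```

Because the key covers both code and data, a code change invalidates exactly the outputs it affects. Two partitions with identical content share a single computation.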

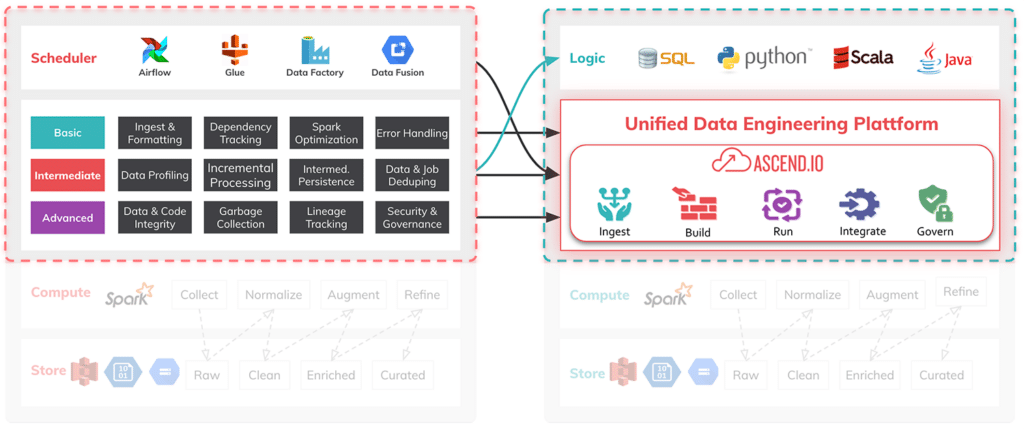

A Primer on Frameworks vs Control Systems

The status quo for building data pipelines is painful and cumbersome. Sure, building the first couple isn't too bad; it's the many that follow. And how they impact each other. And how they become harder and harder to maintain as the dependencies grow and the codebase becomes increasingly unruly. For us as engineers, this was a dead end for our happiness.

To solve this, there are two options: Frameworks and Control Systems. Most other companies went the framework (or imperative) route. Frameworks make it easier to build pipelines at a static point in time, but they lack a feedback loop, which is critical for dynamic systems.

Dynamic systems, like data pipelines, are where control planes (or the declarative approach) shine. Instead of the “when X happens, do Y” framework mentality, a control plane takes the approach of “no matter what happens, make the system look like Z.” As engineers, we wanted the latter. So we built the Dataflow Control Plane.
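The difference between the two mentalities can be sketched in a few lines. An imperative handler reacts to one event at a time. A declarative reconciler compares the desired state ("Z") with the observed state and emits whatever actions close the gap, regardless of which events were missed. This is a simplified illustration under assumed names (`on_partition_arrived`, `reconcile`, date-named partitions), not the Dataflow Control Plane's actual interface.

```python
# Imperative ("when X happens, do Y"): each handler reacts to a single event and
# has no view of the overall state, so a missed or failed event leaves a gap.
def on_partition_arrived(partition: str, job_queue: list[str]) -> None:
    job_queue.append(f"build {partition}")


# Declarative ("no matter what happens, make the system look like Z"):
# diff the desired state against the observed state and emit corrective actions.
def reconcile(desired: set[str], observed: set[str]) -> list[str]:
    actions = [f"build {p}" for p in sorted(desired - observed)]
    actions += [f"delete {p}" for p in sorted(observed - desired)]
    return actions


desired = {"2024-01-01", "2024-01-02", "2024-01-03"}
observed = {"2024-01-01", "2023-12-31"}  # two partitions missing, one unexpected extra
print(reconcile(desired, observed))
# ['build 2024-01-02', 'build 2024-01-03', 'delete 2023-12-31']
```

Running `reconcile` repeatedly is safe. Once the observed state matches the desired state, it returns no actions, and this is what makes a feedback loop possible.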

In architecting this, we solved for three key areas:

User-defined "blueprints of pipelines"

Translating blueprints into jobs and infrastructure

Persisting bidirectional feedback so that the pipelines always match their blueprints
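The three areas above can be sketched together as a small loop. A user-defined blueprint lists each component and the components it reads from. A planner translates the blueprint into an ordered job list, rebuilding anything that is out of date or downstream of a rebuild. The execution result of each job is fed back so that the next round re-plans from what actually succeeded. Everything here is an assumption for illustration: `BLUEPRINT`, `plan_jobs`, `run_until_converged`, and the component names are hypothetical. The only library used is Python's standard `graphlib`.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical blueprint: each component mapped to the components it reads from.
BLUEPRINT: dict[str, set[str]] = {
    "raw_events": set(),
    "clean_events": {"raw_events"},
    "daily_totals": {"clean_events"},
    "report": {"clean_events", "daily_totals"},
}


def plan_jobs(blueprint: dict[str, set[str]], up_to_date: set[str]) -> list[str]:
    """Translate the blueprint into ordered jobs: a component runs if it is not
    up to date or if anything it reads from is about to be rebuilt."""
    stale: set[str] = set()
    jobs: list[str] = []
    for component in TopologicalSorter(blueprint).static_order():  # upstream first
        if component not in up_to_date or blueprint[component] & stale:
            stale.add(component)
            jobs.append(component)
    return jobs


def run_until_converged(blueprint: dict[str, set[str]], up_to_date: set[str],
                        execute: Callable[[str], bool], max_rounds: int = 5) -> set[str]:
    """Feedback loop: plan, execute, record what succeeded, and re-plan until nothing is stale."""
    state = set(up_to_date)
    for _ in range(max_rounds):
        jobs = plan_jobs(blueprint, state)
        if not jobs:
            return state  # observed state now matches the blueprint
        state.difference_update(jobs)  # planned components are stale until they succeed
        for job in jobs:
            if not execute(job):
                break  # failure is fed back; the next round re-plans from here
            state.add(job)
    raise RuntimeError("pipeline did not converge within max_rounds")


attempts: list[str] = []


def flaky_execute(job: str) -> bool:
    attempts.append(job)
    # Simulate one transient failure the first time daily_totals runs.
    return not (job == "daily_totals" and attempts.count("daily_totals") == 1)


final = run_until_converged(BLUEPRINT, {"raw_events", "clean_events"}, flaky_execute)
print(attempts)  # ['daily_totals', 'daily_totals', 'report']
print(sorted(final))  # ['clean_events', 'daily_totals', 'raw_events', 'report']
```

The loop never assumes a job worked. It only marks a component up to date after `execute` reports success. A transient failure therefore costs one extra round rather than leaving the pipeline in an inconsistent state.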

[Figure: Imperative vs. Declarative]

View the On-Demand Tech Talk →

Learn more about intelligent orchestration as we explore how applying the declarative approach to data pipelines results in 95% less code, 10x faster build times, automated maintenance, and more efficient data pipelines.

Download the Whitepaper →

Learn more about data pipeline orchestration techniques in this whitepaper, where we compare and contrast the two approaches to pipeline orchestration and their impact on data engineering.

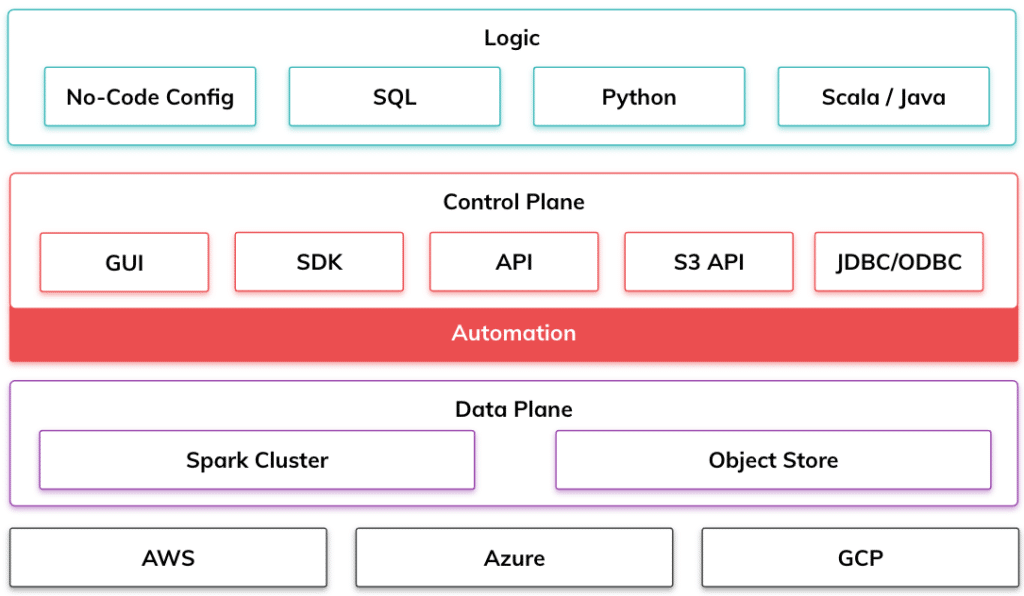

Unifying Data Engineering Architecture with Flex Code

The Ascend Unified Data Engineering Platform delivers a pluggable architecture that enables different teams, with different skill sets, to build modular and interchangeable pieces while using the languages and tools they are already familiar with.
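One way to picture a pluggable architecture is a single shared contract that every component satisfies, whatever language it is written in. Components authored by different teams can then be chained or swapped freely. The sketch below assumes a hypothetical `Component` protocol with a `run(rows)` method and a fixed input schema `(name TEXT, amount INTEGER)` for the SQL component. It uses the standard-library `sqlite3` module as a stand-in SQL engine. It is not Ascend's Flex Code API.

```python
import sqlite3
from typing import Callable, Protocol

Row = tuple


class Component(Protocol):
    """Hypothetical contract every pluggable component satisfies."""

    def run(self, rows: list[Row]) -> list[Row]: ...


class SqlComponent:
    """A component authored in SQL; assumes input rows of (name TEXT, amount INTEGER)."""

    def __init__(self, query: str) -> None:
        self.query = query

    def run(self, rows: list[Row]) -> list[Row]:
        conn = sqlite3.connect(":memory:")  # throwaway in-memory database per run
        try:
            conn.execute("CREATE TABLE input (name TEXT, amount INTEGER)")
            conn.executemany("INSERT INTO input VALUES (?, ?)", rows)
            return conn.execute(self.query).fetchall()
        finally:
            conn.close()


class PythonComponent:
    """A component authored as a plain Python function."""

    def __init__(self, fn: Callable[[list[Row]], list[Row]]) -> None:
        self.fn = fn

    def run(self, rows: list[Row]) -> list[Row]:
        return self.fn(rows)


def run_pipeline(components: list[Component], rows: list[Row]) -> list[Row]:
    """Feed each component's output into the next; any Component can be swapped in."""
    for component in components:
        rows = component.run(rows)
    return rows


pipeline: list[Component] = [
    SqlComponent("SELECT name, SUM(amount) FROM input GROUP BY name ORDER BY name"),
    PythonComponent(lambda rows: [(name, total * 100) for name, total in rows]),
]
print(run_pipeline(pipeline, [("a", 1), ("b", 2), ("a", 3)]))
# [('a', 400), ('b', 200)]
```

Because the pipeline depends only on the `run` contract, a team that prefers SQL and a team that prefers Python can each own a step without touching the other's code.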