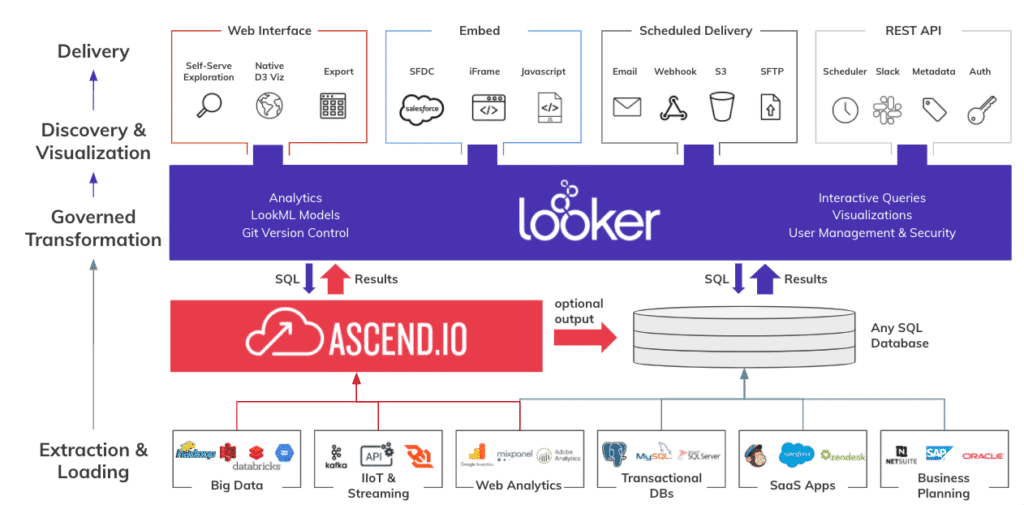

Ascend.io + Looker

From data quality to pipeline visualization and more, learn why Looker users rely on Ascend.io for faster time to analysis.

Ascend is a game changer for data analysts and Looker users who need to access and query many more types of data sources, including those that are not available in relational formats. Check out this video to learn the top reasons why Looker users love Ascend.

Wondering how Looker and Ascend can specifically benefit your data team? Schedule a demo with an Ascend data engineer to learn more.

The Benefits of Looker + Ascend.io

Data Lineage

Easily answer the question “Where did ‘net sales’ come from?” through visualization of lineage and of every calculation and operation applied to the data.

Pipeline Visualizations

Simplify complex queries into easy-to-understand sequential operations through a modern DAG-based GUI that provides useful metadata and state information at a glance.

Adaptive Ingestion

Keep data flowing by intelligently cascading schema changes to the data warehouse. Alert the data team with configurable levels of notifications when breaking changes are detected.
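To make the idea of a "breaking change" concrete, here is a minimal Python sketch of how a schema change might be classified. It is not Ascend's implementation or API: the function names `classify_schema_change` and `is_breaking`, the column-to-type dictionaries, and the rule that removed or retyped columns are breaking while added columns are not are all assumptions made for illustration.

```python
from typing import Dict, List


def classify_schema_change(old: Dict[str, str], new: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare two column -> type mappings and group the differences."""
    added = sorted(col for col in new if col not in old)
    removed = sorted(col for col in old if col not in new)
    retyped = sorted(col for col in old if col in new and old[col] != new[col])
    return {"added": added, "removed": removed, "retyped": retyped}


def is_breaking(change: Dict[str, List[str]]) -> bool:
    """Assumed rule: dropping or retyping a column breaks downstream queries."""
    return bool(change["removed"] or change["retyped"])


# Hypothetical schemas, for illustration only.
old_schema = {"id": "int", "net_sales": "float"}
new_schema = {"id": "int", "net_sales": "string", "region": "string"}

change = classify_schema_change(old_schema, new_schema)
if is_breaking(change):
    print("Alert the data team:", change)
```

Under this assumed rule, a newly added column could cascade to the warehouse quietly, while a retyped column such as `net_sales` would trigger a notification.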

Access to Modern Technology including Data Science Tools

No matter your level of technical skill, we make the most modern capabilities accessible to you instantly! Play with Python, execute Spark jobs, or begin your DS/ML journey with notebooks or MLlib, no training required.

Process More Data Sources

Use native connections to common data sources, and write small Python snippets to bring in anything else. Apply analytics to JSON, Avro, XML, and custom byte-encoded data in parallel. Even extract information from audio, documents, and images by easily including ML/AI engines in the processing sequences.
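As a rough idea of what a "small Python snippet" for a non-relational source can look like, the sketch below turns newline-delimited JSON into flat, table-like rows using only the standard library. The helper names `flatten` and `read_json_lines` and the sample record are hypothetical and do not represent Ascend's connector interface.

```python
import json
from typing import Any, Dict, Iterable, Iterator


def flatten(record: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Turn nested objects into dotted column names, e.g. order.id."""
    flat: Dict[str, Any] = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


def read_json_lines(lines: Iterable[str]) -> Iterator[Dict[str, Any]]:
    """Yield one flat row per non-empty JSON line."""
    for line in lines:
        line = line.strip()
        if line:
            yield flatten(json.loads(line))


# Hypothetical input, for illustration only.
sample = ['{"order": {"id": 1, "total": 9.5}, "region": "west"}']
for row in read_json_lines(sample):
    print(row)
```

Flat rows like these map naturally onto columns that a BI tool can query.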

Ensure Data Quality

Easily explore and validate your data before handing it off to your reports and analysts! Create a validation component that will run every time new data appears.
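A validation step can be as simple as a function that checks each new batch of rows and reports what it finds. The sketch below is illustrative only: `validate_rows`, the `order_id` and `net_sales` column names, and the two rules (required values present, no negative sales) are assumptions, not part of Ascend's product.

```python
from typing import Any, Dict, Iterable, List, Sequence


def validate_rows(
    rows: Iterable[Dict[str, Any]],
    required: Sequence[str] = ("order_id", "net_sales"),
) -> List[str]:
    """Return a list of human-readable problems; an empty list means the batch passed."""
    errors: List[str] = []
    for i, row in enumerate(rows):
        # Rule 1 (assumed): every required column must have a value.
        for col in required:
            if row.get(col) is None:
                errors.append(f"row {i}: missing {col}")
        # Rule 2 (assumed): net sales should never be negative.
        value = row.get("net_sales")
        if isinstance(value, (int, float)) and value < 0:
            errors.append(f"row {i}: negative net_sales")
    return errors


# Hypothetical batch, for illustration only.
batch = [
    {"order_id": 1, "net_sales": 10.0},
    {"order_id": None, "net_sales": -5},
]
for problem in validate_rows(batch):
    print(problem)
```

Running a check like this on every new batch means problems surface before the data reaches a report.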

Looker with Ascend.io