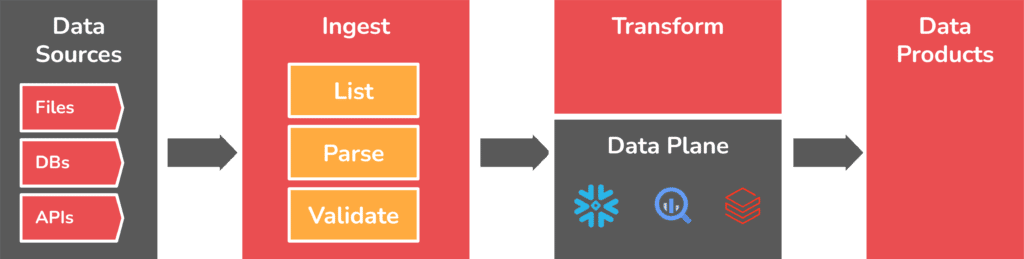

Let’s start with a quick recap of data products and pipelines:

Data products are the currency that creates business value in the digital age. These products are created with data pipelines, which are the modern-day successor to traditional ELT sequences. Data pipelines are developed and deployed on data pipeline platforms, and rely on separate tools that load data from sources of “raw data” found in applications, databases, APIs, and file systems within and from outside the business. Good so far?

So if data loading is such a fundamental step, why is Ascend giving it away for free? Is it an unsupported, cheap open-source pile of code that costs time and effort to operationalize? Does it even work?

To answer this question, let’s first clarify that Ascend ingestion is a fully integrated framework within the entire Data Automation platform. While this alone is a key improvement over other tools that operate data-loading silos, let’s look in detail at the inner workings that solve the ingestion problem much more thoroughly to produce time and cost savings at scale.

Three Phases of Automated Data Ingestion

To start with, Ascend ingestion is an omnivore, able to load any data from anywhere. Your source data can come from files in an object store (S3, ADLS, etc.), database tables, streaming messages, APIs, and SaaS products like Salesforce. You can also write custom code in the platform’s Python component to solve your off-the-wall data sources and one-off formats. Each data source is configured in the form of a Read Component in the platform, as documented here.

Under the hood, ingestion consists of three phases: List Objects, Parse, and Verification. Each phase is engineered to be extensible across all hyperscalers (AWS, GCP, and Azure), data clouds (Snowflake, Databricks, BigQuery, etc.), and all of your data sources.

These ingestion phases ride on top of a fully integrated connection manager that handles the physical connections to different source systems, and uses a credential vault to secure access. Since this is similar to how enterprise-grade secure system-to-system connections are commonly handled, we won’t delve into these details here.

Phase 1: List Objects

The first ingestion phase is List Objects. Here, the framework collects detailed metadata information about the data objects that it can ‘see’ in each source system. Within each Read component, you select the objects to ingest, and the platform takes care of the rest, including selecting an effective partition strategy. Unlike other ingestion platforms, Ascend does not transform the source into a proprietary schema. Instead, you have direct access to modify the source schema during a later transformation process.

Regardless of the type of data, Ascend DataAware™ control plane keeps track of new data generated in each source system as distinct “partitions,” and uses metadata about each partition to optimize costs and processing times. For large data sets, this intelligent partitioning strategy can produce dramatic processing time improvements and actual cost savings with no engineering required by you.

To be able to instantly detect change, the system uses a native checksum process to generate unique source “fingerprints,” as well as other data aging indicators provided by some of the source file systems. Data partitions that have no changes are ignored. Even your CDC data sources like PostgreSQL are taken care of.

Partitions with meaningful changes are passed to the Parse phase. Ascend automation saves you time and money over and over again by always avoiding the reprocessing of data.

Phase 2: Parse

After List Objects, each new partition of your data, and all of its metadata, moves to the Parse phase. To handle very large data sets with thousands of simultaneous partitions, the platform parallelizes the resulting Parse workloads. Reading each partition includes validating its structure (schema) and renewing its unique fingerprint. If the structure has changed, those partitions are automatically tagged for continued processing. The process works like this:

- Each partition is validated and data types adjusted to match your schema.

- Schema violations, invalid data, and other abnormal conditions are automatically detected.

- Conversion errors are processed according to your configuration by issuing a warning or stopping.

- Partitions with no meaningful changes are not processed further, to avoid reprocessing costs.

Throughout this process, the system collects statistics, metadata, and data profiling information. This active metadata is used by the Ascend DataAware™ technology to maintain incremental processing, and to drive efficiency and speed in your downstream transformations with additional performance and cost optimization techniques.

Phase 3: Verification

So what about data quality? On Ascend, data ingestion includes a native Verification phase that helps you nip data problems in the bud. This process applies a broad set of criteria immediately after Parse. Your verifications can include as a mix of NULL, range, or value tests, as well as custom statements expressed in SQL logic. You can validate the structure of a JSON document, compare values with your master data catalog, or verify record counts. You configure the pipeline process to stop or simply issue a warning when verification inconsistencies are discovered.

Since data quality is an enterprise-level concern, Ascend is compatible with your enterprise data quality tools through easily accessed verification results tables. You can also enhance your integration via our comprehensive API.

So where is your data staged? While in traditional data loading processes this is a key concern, on Ascend data staging is completely automated. Without a line of code, DataAware™ continually powers all interim staging throughout the loading process and all downstream transformations. Even data verification can be applied at each step in your pipeline, providing unprecedented visibility into the quality of your data. If you like, you can confirm the data locations through your console of AWS, GCP, Azure, Snowflake, Databricks, BigQuery, etc.

Enhanced Ascend Ingestion Features

In addition to the three phases, data ingestion on Ascend includes a wide range of powerful out-of-the-box capabilities.

Deployment Model

Since the entire ingestion framework is deployed in your cloud account as part of the Ascend platform, the data moves from its sources directly into your Snowflake, Databricks, BigQuery, etc., and does not pass through any external SaaS. Even during the transformation steps that follow, Ascend sends the jobs to be run inside your account, and does not pull your data out for any external processing.

Ingestion Scheduling

The ingestion framework includes several methods for determining when to start the ingestion process for each individual source. You can also trigger Ascend from your favorite enterprise orchestration or scheduling tool. Some supported ingestion triggers are:

- Scheduled using a clock

- Hourly, daily, weekly

- Scripted using the Platform API or Python SDK

- Executed from a shell script

- Defined as a chron expression

- Manually triggered from the UI

Automatic Schema Inference

As part of creating a Read component but before the three phases actually kick off, Ascend automatically executes a schema discovery to support your configuration process. For this step, Ascend has integrated a common open-source schema inference tool renowned for its reliability and scalability. You can extract this metadata via the API to add it to your enterprise data catalog.

Streaming Ingestion

With regard to streaming data sources, Ascend uses a micro-batching design to match the architecture of the DataAware™ control plane. When listening to a Kafka data stream, PubSub subscription, or other queue and stream-based technologies, Ascend collects messages that arrive over a specific time frame, then processes the resulting micro-batches. Like most batch-based sources, these configurable intervals establish the partition size and the timing for the downstream processing, where the data is transformed and harmonized into valuable data sets.

Registered Tables

This feature allows you to read data that has already been ingested into your data plane by Ascend or an external process. You simply let the DataAware™ control plane know about your data and it takes care of the rest. The same metadata processing occurs for these objects as any of your external ingestion sources. Since these objects already physically exist in the data plane, the framework doesn’t need to use resources for connection and write processing.

Final Thoughts About Ascend Ingestion and Next Steps

The Ascend team includes top experts in the use of GCP, Azure and AWS, as well as all the large data cloud providers including Snowflake, BigQuery, Databricks, and Redshift. Since Ascend operates seamlessly across all of them, your engineers and architects can be unhindered while selecting a data cloud. With Ascend, your team can focus on delivering value instead of keeping up with frequently changing infrastructure demands, upgrades, or best practices.

The Ascend ingestion framework is designed and built to save your data teams time and money. DataAware™ technology powers all three ingestion phases as well as all downstream transformation components, giving you a seamless end-to-end workflow. See for yourself by scheduling a demo with our data experts or creating a free developer tier account.